USENIX Security '20: Defending Against Image-Scaling Attacks in Machine Learning 🛡️

Explore how image-scaling attacks threaten machine learning systems and how they can be prevented. This USENIX Security '20 talk presents an analysis of the attacks' root cause and a defense developed by researchers at TU Braunschweig.

USENIX

817 views • Sep 14, 2020

About this video

Adversarial Preprocessing: Understanding and Preventing Image-Scaling Attacks in Machine Learning

Erwin Quiring, David Klein, Daniel Arp, Martin Johns, and Konrad Rieck, TU Braunschweig

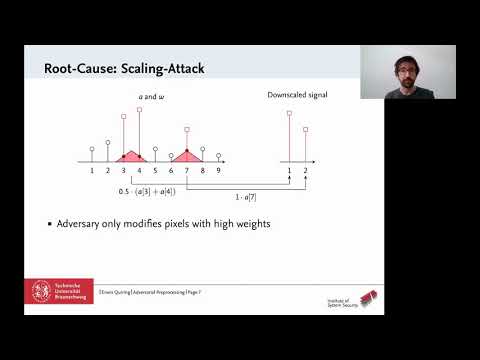

Machine learning has made remarkable progress in recent years, yet its success has been overshadowed by various attacks that can thwart its correct operation. While a large body of research has studied attacks against learning algorithms, vulnerabilities in the preprocessing for machine learning have received little attention so far. An exception is the recent work of Xiao et al., which proposes attacks against image scaling. In contrast to prior work, these attacks are agnostic to the learning algorithm and thus impact the majority of learning-based approaches in computer vision. The mechanisms underlying the attacks, however, are not yet understood, and hence their root cause remains unknown.

In this paper, we provide the first in-depth analysis of image-scaling attacks. We theoretically analyze the attacks from the perspective of signal processing and identify their root cause as the interplay of downsampling and convolution. Based on this finding, we investigate three popular imaging libraries for machine learning (OpenCV, TensorFlow, and Pillow) and confirm the presence of this interplay in different scaling algorithms. As a remedy, we develop a novel defense against image-scaling attacks that prevents all possible attack variants. We empirically demonstrate the efficacy of this defense against non-adaptive and adaptive adversaries.
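The following is a minimal sketch of why the interplay of downsampling and a narrow sampling window matters. It is an illustration only, not the authors' code or their defense. It assumes a toy nearest-neighbor scaler that keeps every `factor`-th pixel, and it uses block averaging purely as a contrast case in which every pixel contributes to the output. The helper names (`nearest_downscale`, `block_average`, `embed_target`) and the random test images are hypothetical.

```python
import numpy as np

def nearest_downscale(img, factor):
    """Toy nearest-neighbor scaler: keep every `factor`-th pixel."""
    return img[::factor, ::factor]

def block_average(img, factor):
    """Contrast case: average each factor x factor block, so all pixels count."""
    h, w = img.shape
    blocks = img.astype(np.float64).reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

def embed_target(source, target, factor):
    """Overwrite only the pixels that the toy nearest-neighbor scaler samples."""
    attack = source.copy()
    attack[::factor, ::factor] = target
    return attack

rng = np.random.default_rng(0)
factor = 8
source = rng.integers(0, 256, size=(256, 256), dtype=np.uint8)  # image a viewer sees
target = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)    # image the model sees

attack = embed_target(source, target, factor)

# Fraction of pixels the attacker had to modify (at most 1 / factor**2).
changed = np.mean(attack != source)

# The sparse scaler outputs exactly the attacker's target.
downscaled_nn = nearest_downscale(attack, factor)

# With block averaging, the same modification barely moves the output.
avg_attack = block_average(attack, factor)
avg_source = block_average(source, factor)
max_shift = np.max(np.abs(avg_attack - avg_source))

print(f"pixels changed: {changed:.4f}")
print(f"nearest-neighbor output equals target: {np.array_equal(downscaled_nn, target)}")
print(f"max shift under block averaging: {max_shift:.2f} gray levels")
```

In this toy setting, the attacker controls the scaler's output by changing fewer than 2% of the pixels, because the scaler ignores all the others. When every pixel in a block is weighted equally, one modified pixel per block can shift the result by at most 255 / 64 gray levels. Real library scalers use different kernels and sampling positions, so the numbers here should not be read as measurements of OpenCV, TensorFlow, or Pillow.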

View the full USENIX Security '20 program at https://www.usenix.org/conference/usenixsecurity20/technical-sessions

Video Information

Views: 817
Likes: 11
Duration: 11:15
Published: Sep 14, 2020
