Mastering Retrieval Augmented Generation (RAG): Embeddings, Sentence BERT & Vector Databases 🚀

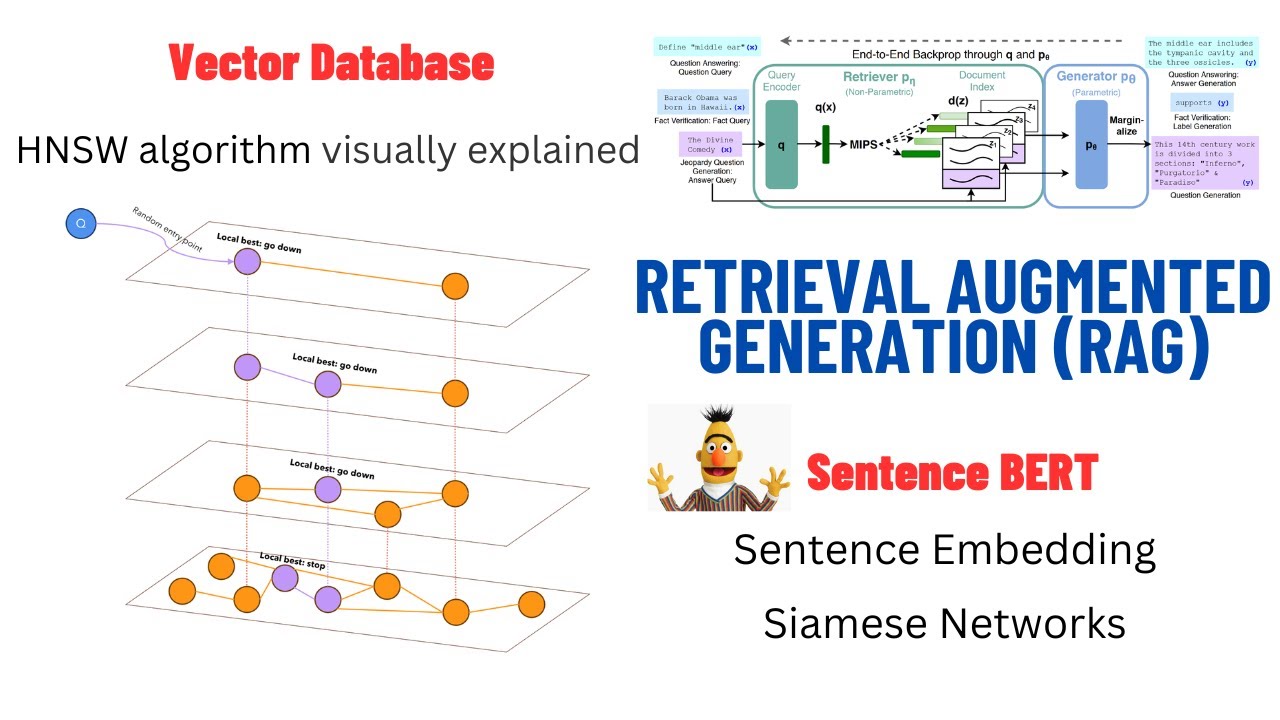

Discover how Retrieval Augmented Generation (RAG) works, including embeddings, Sentence BERT, and vector databases built on search algorithms like HNSW. Unlock the full pipeline to enhance your AI projects!

Umar Jamil

80.6K views • Nov 27, 2023

About this video

Get your $5 coupon for Gradient: https://gradient.1stcollab.com/umarjamilai

In this video we explore the entire Retrieval Augmented Generation pipeline. I will start by reviewing language models, their training and inference, and then explore the main ingredient of a RAG pipeline: embedding vectors. We will see what embedding vectors are, how they are computed, and how we can compute embedding vectors for sentences. We will also explore what a vector database is, along with the popular HNSW (Hierarchical Navigable Small Worlds) algorithm that vector databases use to find the embedding vectors most similar to a given query.

Download the PDF slides: https://github.com/hkproj/retrieval-augmented-generation-notes

Sentence BERT paper: https://arxiv.org/pdf/1908.10084.pdf

Chapters

00:00 - Introduction

02:22 - Language Models

04:33 - Fine-Tuning

06:04 - Prompt Engineering (Few-Shot)

07:24 - Prompt Engineering (QA)

10:15 - RAG pipeline (introduction)

13:38 - Embedding Vectors

19:41 - Sentence Embedding

23:17 - Sentence BERT

28:10 - RAG pipeline (review)

29:50 - RAG with Gradient

31:38 - Vector Database

33:11 - K-NN (Naive)

35:16 - Hierarchical Navigable Small Worlds (Introduction)

35:54 - Six Degrees of Separation

39:35 - Navigable Small Worlds

43:08 - Skip-List

45:23 - Hierarchical Navigable Small Worlds

47:27 - RAG pipeline (review)

48:22 - Closing
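
As a rough illustration of the retrieval step covered in the "K-NN (Naive)" chapter, here is a minimal sketch of a brute-force nearest-neighbor search over embedding vectors using cosine similarity. It is not taken from the video or its slides: the function names `cosine_similarity` and `knn_search`, the three-dimensional toy vectors, and the document labels are all made up for illustration. Real embeddings (for example from Sentence BERT) have hundreds of dimensions, and a vector database would typically replace this linear scan with an approximate index such as HNSW.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def knn_search(query, vectors, k):
    """Naive K-NN: score the query against every stored vector,
    then return the indices of the k most similar ones."""
    scored = [(cosine_similarity(query, v), i) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:k]]


# Toy data (hypothetical): three "documents" with made-up 3-d embeddings.
documents = ["cats", "dogs", "stock market"]
embeddings = [
    [0.9, 0.1, 0.0],
    [0.8, 0.3, 0.1],
    [0.0, 0.2, 0.9],
]
query_embedding = [1.0, 0.2, 0.0]

top_indices = knn_search(query_embedding, embeddings, k=2)
retrieved = [documents[i] for i in top_indices]
```

In a RAG pipeline the retrieved documents would then be placed into the prompt as context for the language model. The linear scan compares the query against every stored vector, which is the cost that graph-based indexes such as HNSW are designed to avoid.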



Video Information

Views: 80.6K

Likes: 2.7K

Duration: 49:24

Published: Nov 27, 2023

User Reviews

4.7 (16)