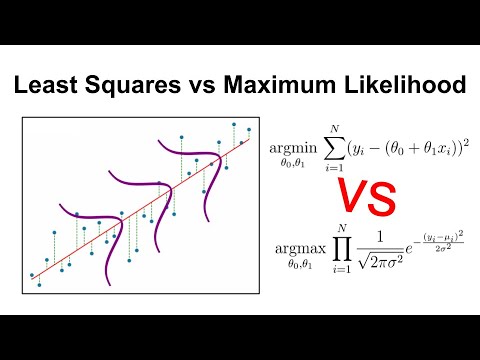

Least Squares vs Maximum Likelihood: Understanding the Connection

This video explains how the least squares method connects to maximum likelihood estimation under a Gaussian noise assumption.

DataMListic

29.1K views • Jul 10, 2024

About this video

In this video, we explore why the least squares method is closely related to the Gaussian distribution. Simply put, if the residuals are assumed to be independent and normally distributed around the regression line (zero mean, constant variance), then maximizing the likelihood of the data is equivalent to minimizing the sum of squared residuals.
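The link between the two methods can be checked numerically. The sketch below is not code from the video. It is a minimal illustration under assumed settings: synthetic data from a line with slope 2 and intercept 1, Gaussian noise with standard deviation `SIGMA = 0.5`, and hypothetical helper names (`fit_least_squares`, `sum_squared_residuals`, `gaussian_nll`). It fits the line by least squares and then shows that the Gaussian negative log-likelihood is a constant plus the sum of squared residuals divided by 2σ², so the least squares fit is also the likelihood maximizer.

```python
import math
import random


def fit_least_squares(xs, ys):
    """Closed-form slope and intercept that minimize the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def sum_squared_residuals(slope, intercept, xs, ys):
    """Least squares objective for the line y = slope * x + intercept."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


def gaussian_nll(slope, intercept, xs, ys, sigma):
    """Negative log-likelihood assuming each y ~ N(slope * x + intercept, sigma^2)."""
    total = 0.0
    for x, y in zip(xs, ys):
        mu = slope * x + intercept
        total += 0.5 * math.log(2 * math.pi * sigma ** 2)
        total += (y - mu) ** 2 / (2 * sigma ** 2)
    return total


# Synthetic data (assumed for illustration): true line y = 2x + 1 plus Gaussian noise.
random.seed(0)
SIGMA = 0.5
xs = [i / 10 for i in range(50)]
ys = [2.0 * x + 1.0 + random.gauss(0.0, SIGMA) for x in xs]

slope, intercept = fit_least_squares(xs, ys)
sse = sum_squared_residuals(slope, intercept, xs, ys)
best_nll = gaussian_nll(slope, intercept, xs, ys, SIGMA)

# The NLL decomposes into a parameter-independent constant plus SSE / (2 * sigma^2).
constant = 0.5 * len(xs) * math.log(2 * math.pi * SIGMA ** 2)
print("NLL at least squares fit:", best_nll)
print("constant + SSE / (2 sigma^2):", constant + sse / (2 * SIGMA ** 2))

# Moving away from the least squares fit can only increase the NLL.
perturbed_nlls = [
    gaussian_nll(slope + d_slope, intercept + d_intercept, xs, ys, SIGMA)
    for d_slope, d_intercept in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]
]
print("NLL at perturbed parameters:", perturbed_nlls)
```

Because the constant term does not depend on the slope or intercept, the value chosen for `SIGMA` changes the size of the likelihood but not the location of its maximum, which is why the least squares fit needs no knowledge of the noise variance.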

*References*

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

Multivariate Normal (Gaussian) Distribution Explained: https://youtu.be/UVvuwv-ne1I

*Related Videos*

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

Why We Don't Use the Mean Squared Error (MSE) Loss in Classification: https://youtu.be/bNwI3IUOKyg

The Bessel's Correction: https://youtu.be/E3_408q1mjo

Gradient Boosting with Regression Trees Explained: https://youtu.be/lOwsMpdjxog

P-Values Explained: https://youtu.be/IZUfbRvsZ9w

Kabsch-Umeyama Algorithm: https://youtu.be/nCs_e6fP7Jo

Eigendecomposition Explained: https://youtu.be/ihUr2LbdYlE

*Contents*

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

00:00 - Intro

00:38 - Linear Regression with Least Squares

01:20 - Gaussian Distribution

02:10 - Maximum Likelihood Demonstration

03:23 - Final Thoughts

04:33 - Outro

*Follow Me*

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

🐦 Twitter: @datamlistic https://twitter.com/datamlistic

📸 Instagram: @datamlistic https://www.instagram.com/datamlistic

📱 TikTok: @datamlistic https://www.tiktok.com/@datamlistic

*Channel Support*

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

The best way to support the channel is to share the content. ;)

If you'd like to also support the channel financially, donating the price of a coffee is always warmly welcomed! (completely optional and voluntary)

► Patreon: https://www.patreon.com/datamlistic

► Bitcoin (BTC): 3C6Pkzyb5CjAUYrJxmpCaaNPVRgRVxxyTq

► Ethereum (ETH): 0x9Ac4eB94386C3e02b96599C05B7a8C71773c9281

► Cardano (ADA): addr1v95rfxlslfzkvd8sr3exkh7st4qmgj4ywf5zcaxgqgdyunsj5juw5

► Tether (USDT): 0xeC261d9b2EE4B6997a6a424067af165BAA4afE1a

#svd #singularvaluedecomposition #eigenvectors #eigenvalues #linearalgebra

Video Information

Views: 29.1K

Likes: 1.3K

Duration: 4:49

Published: Jul 10, 2024
