Unlocking Deep Neuro-Evolution with Scaled Map-Elites 🚀

Discover how the paper 'Scaling Map-Elites to Deep Neuro-Evolution' (GECCO 2020) scales the MAP-Elites quality-diversity algorithm to controllers parameterized by large neural networks. In this talk, Cédric Colas and Joost Huizinga present new methods to improve the efficiency and capabilities of AI evolution.

Flowers INRIA

675 views • Jul 2, 2021

About this video

Video of the paper "Scaling Map-Elites to Deep Neuro-Evolution" presented at the GECCO 2020 conference.

Authors:

Cédric Colas (INRIA);

Joost Huizinga (UberAI Labs);

Vashisht Madhavan (UberAI Labs);

Jeff Clune (UberAI Labs).

Abstract:

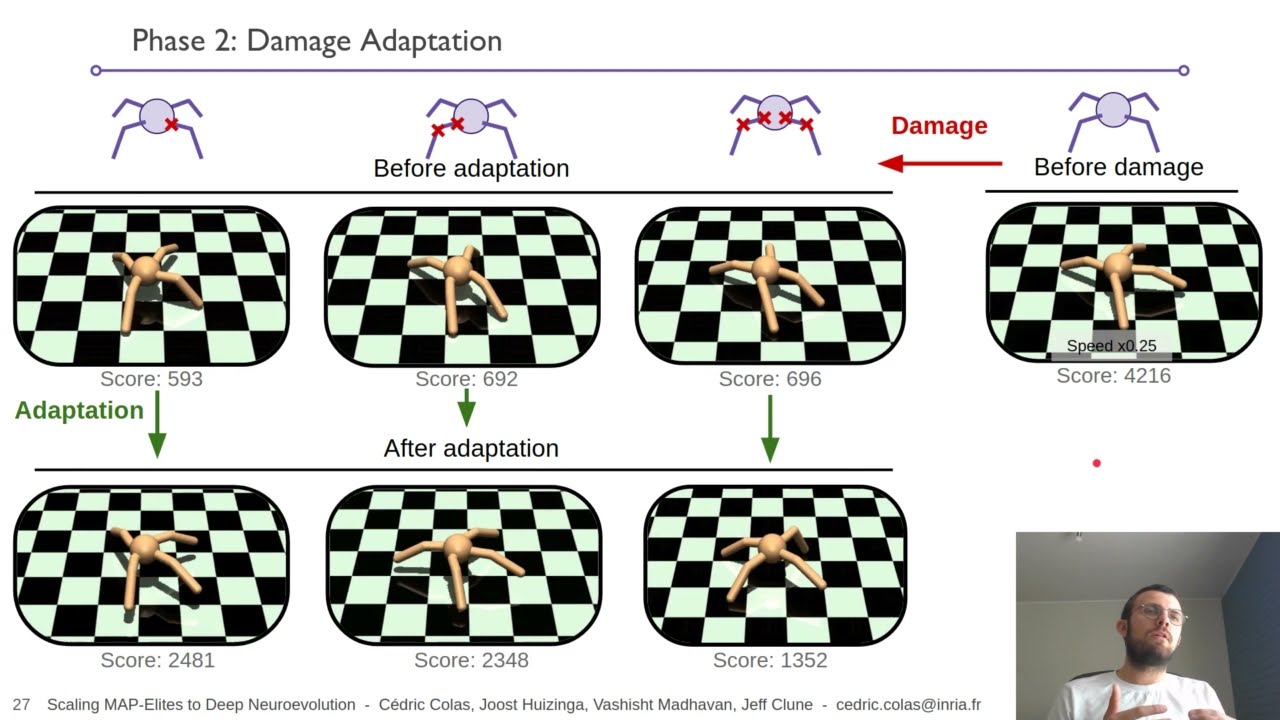

Quality-Diversity (QD) algorithms, and MAP-Elites (ME) in particular, have proven very useful for a broad range of applications including enabling real robots to recover quickly from joint damage, solving strongly deceptive maze tasks or evolving robot morphologies to discover new gaits. However, present implementations of ME and other QD algorithms seem to be limited to low-dimensional controllers with far fewer parameters than modern deep neural network models. In this paper, we propose to leverage the efficiency of Evolution Strategies (ES) to scale MAP-Elites to high-dimensional controllers parameterized by large neural networks. We design and evaluate a new hybrid algorithm called MAP-Elites with Evolution Strategies (ME-ES) for post-damage recovery in a difficult high-dimensional control task where traditional ME fails. Additionally, we show that ME-ES performs efficient exploration, on par with state-of-the-art exploration algorithms in high-dimensional control tasks with strongly deceptive rewards.
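
As a rough reading aid for the abstract, here is a minimal Python sketch of the general idea of combining MAP-Elites with Evolution Strategies. It is not the authors' ME-ES implementation. A MAP-Elites archive keeps the best controller (the "elite") found in each cell of a discretized behavior space. Classic MAP-Elites creates new candidates by randomly mutating a selected elite; this sketch instead takes an ES step from that elite, using an antithetic Gaussian estimate of the fitness gradient. The toy fitness function, the 2-D behavior descriptor, the uniform parent selection, and all names and hyperparameters (`fitness`, `behavior`, `me_es_sketch`, `sigma`, `lr`, `n_pairs`) are hypothetical assumptions chosen for readability.

```python
import math
import random

# Hypothetical toy task (assumption, not from the paper): a "controller"
# is a list of floats; fitness rewards parameters close to zero.
def fitness(theta):
    return -sum(x * x for x in theta)

# Hypothetical 2-D behavior descriptor in (0, 1)^2 built from the first two parameters.
def behavior(theta):
    return tuple(0.5 + 0.5 * math.tanh(x) for x in theta[:2])

def cell_of(bd, bins):
    # Discretize each descriptor dimension into `bins` cells (clamped to the last bin).
    return tuple(min(int(b * bins), bins - 1) for b in bd)

def es_gradient(f, theta, sigma, n_pairs, rng):
    """Antithetic (mirrored) Gaussian estimate of the gradient of f at theta."""
    grad = [0.0] * len(theta)
    for _ in range(n_pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in theta]
        f_plus = f([t + sigma * e for t, e in zip(theta, eps)])
        f_minus = f([t - sigma * e for t, e in zip(theta, eps)])
        for i, e in enumerate(eps):
            grad[i] += (f_plus - f_minus) * e
    scale = 1.0 / (2.0 * n_pairs * sigma)
    return [g * scale for g in grad]

def try_insert(archive, theta, bins):
    """MAP-Elites rule: keep theta if its cell is empty or it beats the current elite."""
    cell = cell_of(behavior(theta), bins)
    fit = fitness(theta)
    if cell not in archive or fit > archive[cell][1]:
        archive[cell] = (theta, fit)
        return True
    return False

def me_es_sketch(dim=5, bins=5, iterations=200, sigma=0.05, lr=0.1,
                 n_pairs=10, seed=0):
    rng = random.Random(seed)
    archive = {}  # cell -> (theta, fitness)
    # Seed the archive with random controllers.
    for _ in range(10):
        try_insert(archive, [rng.uniform(-2.0, 2.0) for _ in range(dim)], bins)
    for _ in range(iterations):
        # Uniform parent selection (an assumption made for simplicity).
        parent, _ = archive[rng.choice(list(archive))]
        # An ES step on fitness replaces the random mutation of classic MAP-Elites.
        grad = es_gradient(fitness, parent, sigma, n_pairs, rng)
        child = [t + lr * g for t, g in zip(parent, grad)]
        try_insert(archive, child, bins)
    return archive

archive = me_es_sketch()
best_theta, best_fit = max(archive.values(), key=lambda item: item[1])
print(len(archive), "filled cells; best fitness:", round(best_fit, 4))
```

Running it prints the number of filled cells and the best fitness found; the exact numbers depend on the seed. The published method is considerably more involved (see the linked article). In particular, this toy uses a handful of parameters rather than the large neural network controllers that motivate the paper.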

Links:

Article: https://arxiv.org/pdf/2003.01825.pdf

Slides: https://ccolas.github.io/data/slides_mees.pdf

Personal page (Cédric): https://ccolas.github.io/

Google scholar page: https://scholar.google.fr/citations?user=VBz8gZ4AAAAJ

Video Information

Likes

16

Duration

23:25
