Efficient Large-Scale Machine Learning with Conditional Gradient Algorithms 🚀

Discover how conditional gradient (Frank-Wolfe) algorithms can solve large-scale convex optimization problems in machine learning, such as noisy matrix completion and multi-class classification, that proximal-gradient methods struggle to scale to.

IHES (Institut des Hautes Études Scientifiques)

34 views • Apr 16, 2013

About this video

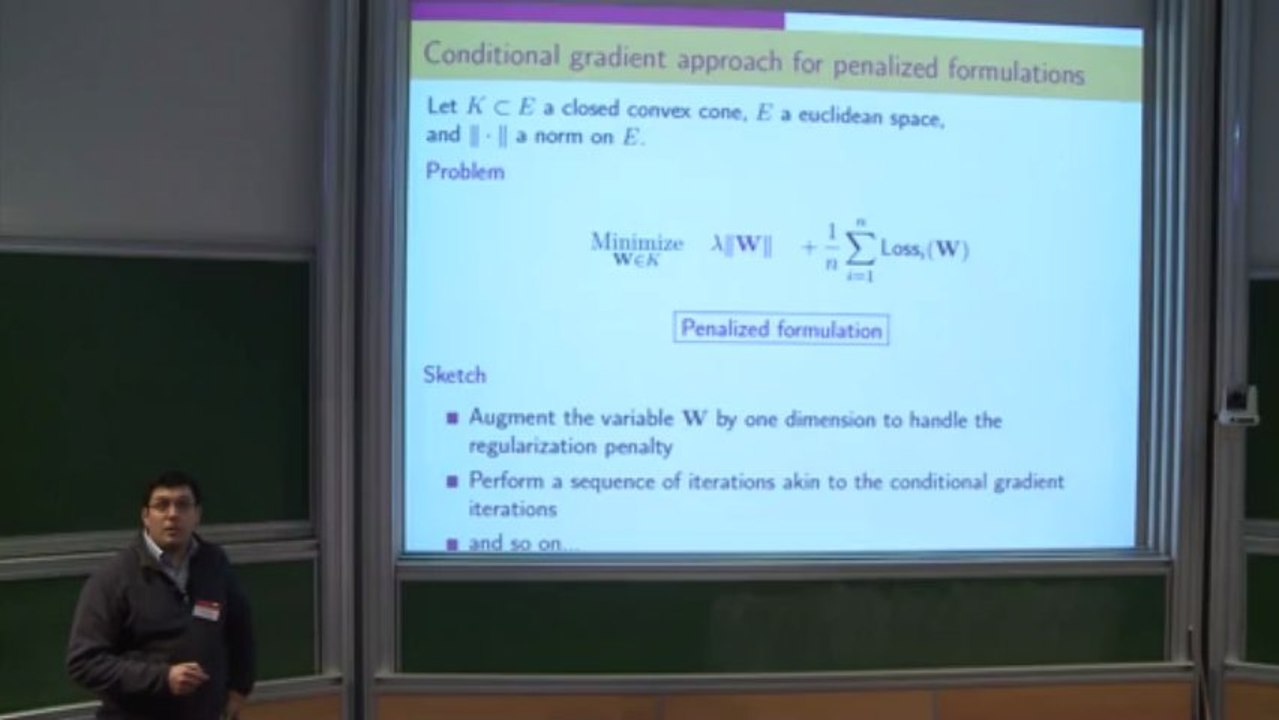

Large-scale learning with conditional gradient algorithms

We consider convex optimization problems arising in machine learning in large-scale settings. For several important learning problems, such as noisy matrix completion or multi-class classification, state-of-the-art optimization approaches such as composite minimization (a.k.a. proximal-gradient) algorithms are difficult to apply and do not scale up to large datasets. We propose three extensions of the conditional gradient algorithm (a.k.a. the Frank-Wolfe algorithm), suitable for large-scale problems, and establish their finite-time convergence guarantees. Promising experimental results are presented on large-scale real-world datasets.
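The abstract does not spell out the three proposed extensions, so the following is only a minimal sketch of the classical Frank-Wolfe method applied to one problem it mentions, noisy matrix completion. It assumes a squared loss on the observed entries and a nuclear-norm-ball constraint of radius `tau` (a hypothetical tuning parameter). It illustrates why conditional gradient can scale where proximal-gradient methods struggle: each iteration needs only the leading singular pair of the gradient (approximated here by power iteration), whereas a nuclear-norm proximal step performs singular value thresholding, which generally needs many singular pairs. The function names `frank_wolfe_completion` and `top_singular_pair` are illustrative and do not come from the talk.

```python
import numpy as np


def top_singular_pair(G, n_iter=100, seed=0):
    """Approximate the leading singular vectors (u, v) of G by power iteration."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(G.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(n_iter):
        u = G @ v
        u /= np.linalg.norm(u)
        v = G.T @ u
        v /= np.linalg.norm(v)
    return u, v


def frank_wolfe_completion(M, mask, tau, n_iter=200):
    """Classical Frank-Wolfe for
        min 0.5 * ||P_Omega(X - M)||_F^2   s.t.   ||X||_* <= tau.

    M:    matrix whose entries are trusted only where mask is True
    mask: boolean array, True where an entry is observed
    tau:  radius of the nuclear-norm ball (hypothetical parameter)
    """
    X = np.zeros_like(M, dtype=float)
    history = []
    for k in range(n_iter):
        # Residual on observed entries; this is also the gradient of the loss.
        R = np.where(mask, X - M, 0.0)
        history.append(0.5 * np.sum(R ** 2))
        if np.linalg.norm(R) < 1e-12:
            break
        # Linear minimization oracle over the nuclear-norm ball:
        # argmin_{||S||_* <= tau} <R, S> = -tau * u1 v1^T (a rank-one matrix).
        u, v = top_singular_pair(R)
        S = -tau * np.outer(u, v)
        gamma = 2.0 / (k + 2.0)            # standard open-loop step size
        X = (1.0 - gamma) * X + gamma * S  # convex combination stays feasible
    return X, history


# Usage on synthetic data: a rank-3 matrix with roughly half its entries observed.
rng = np.random.default_rng(1)
A = rng.standard_normal((30, 3)) @ rng.standard_normal((3, 20))
mask = rng.random(A.shape) < 0.5
true_nuc = np.linalg.svd(A, compute_uv=False).sum()  # choose tau so A itself is feasible
X, hist = frank_wolfe_completion(A, mask, tau=true_nuc)
print(f"objective: {hist[0]:.2f} -> {hist[-1]:.2f}")
```

The step size 2/(k+2) is the textbook choice that yields an O(1/k) rate on the objective for smooth convex losses; a line search is a common alternative. At genuinely large scale one would keep X in factored form (a list of rank-one terms) and exploit the sparsity of the mask instead of forming dense matrices as this sketch does.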

Video Information

Duration

27:24