Why Deep Learning Works So Well 🤖

Explore why deep learning models perform so well and how they learn in Part 3 of the series.

Welch Labs

312.1K views • Aug 10, 2025

About this video

Take your personal data back with Incogni! Use code WELCHLABS and get 60% off an annual plan: http://incogni.com/welchlabs

New Patreon Rewards (33:31): own a piece of Welch Labs history!

https://www.patreon.com/welchlabs

Books & Posters

https://www.welchlabs.com/resources

Sections

0:00 - Intro

4:49 - How Incogni Saves Me Time

6:32 - Part 2 Recap

8:10 - Moving to Two Layers

9:15 - How Activation Functions Fold Space

11:45 - Numerical Walkthrough

13:42 - Universal Approximation Theorem

15:45 - The Geometry of Backpropagation

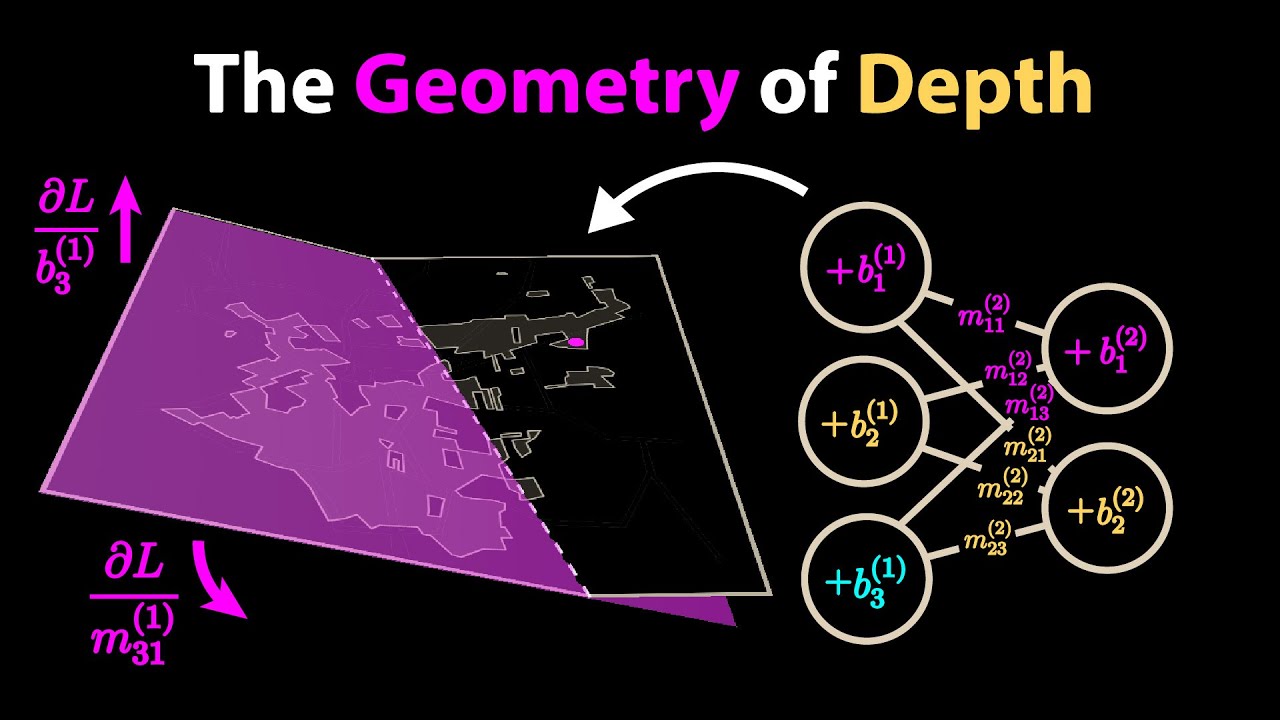

19:52 - The Geometry of Depth

24:27 - Exponentially Better?

30:23 - Neural Networks Demystified

31:50 - The Time I Quit YouTube

33:31 - New Patreon Rewards!

Special Thanks to Patrons https://www.patreon.com/welchlabs

Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin, Nicolas baumann, Jason Singh, Robert Riley, vornska, Barry Silverman, Jake Ehrlich, Mitch Jacobs, Lauren Steely, Jeff Eastman, Rodolfo Ibarra, Clark Barrus, Rob Napier, Andrew White, Richard B Johnston, abhiteja mandava, Burt Humburg, Kevin Mitchell, Daniel Sanchez, Ferdie Wang, Tripp Hill, Richard Harbaugh Jr, Prasad Raje, Kalle Aaltonen, Midori Switch Hound, Zach Wilson, Chris Seltzer, Ven Popov, Hunter Nelson, Amit Bueno, Scott Olsen, Johan Rimez, Shehryar Saroya, Tyler Christensen, Beckett Madden-Woods, Darrell Thomas, Javier Soto

References

Prince, Simon. *Understanding Deep Learning*. https://udlbook.github.io/udlbook/

Liang, Shiyu, and Rayadurgam Srikant. "Why deep neural networks for function approximation?" *arXiv preprint arXiv:1610.04161* (2016).

Hanin, Boris, and David Rolnick. "Deep ReLU networks have surprisingly few activation patterns." *Advances in Neural Information Processing Systems* 32 (2019).

Hanin, Boris, and David Rolnick. "Complexity of linear regions in deep networks." *International Conference on Machine Learning*. PMLR, 2019.

Fan, Feng-Lei, et al. "Deep ReLU networks have surprisingly simple polytopes." *arXiv preprint arXiv:2305.09145* (2023).

All Code:

https://github.com/stephencwelch/manim_videos

100k neuron wide example training code: https://github.com/stephencwelch/manim_videos/blob/master/_2025/backprop_3/notebooks/Wide%20Training%20Example.ipynb

Written by: Stephen Welch

Produced by: Stephen Welch, Sam Baskin, and Pranav Gundu

Premium Beat IDs

EEDYZ3FP44YX8OWTe

MWROXNAY0SPXCMBS

Video Information

Views: 312.1K

Likes: 16.6K

Duration: 34:09

Published: Aug 10, 2025