Understanding Word Embeddings in NLP 📚

Learn how word embeddings convert text into numbers, a key step in training NLP models such as Word2Vec and LLMs.

Under The Hood

44.6K views • Feb 22, 2025

About this video

#word2vec #llm

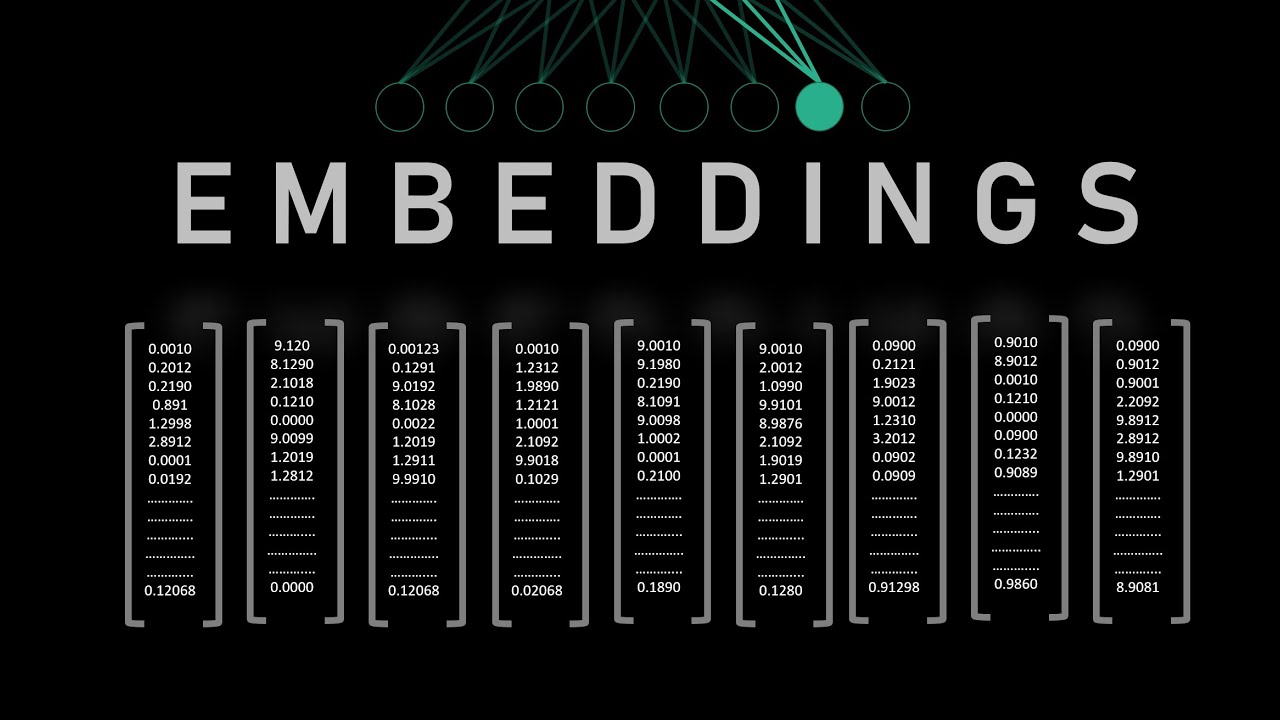

Converting text into numbers is the first step in training any machine learning model for NLP tasks. One-hot encoding and bag of words are simple ways to represent text as numbers, but they capture neither the meaning of words nor the context they appear in, which makes them unsuitable for NLP tasks like language translation and text generation. Embeddings represent words as vectors that capture their semantic meaning.
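To make the limitation concrete, here is a minimal sketch in Python with NumPy. The three-word vocabulary and the 2-d embedding values are made up for illustration and are not taken from the video; real embeddings are learned from data and have far more dimensions.

```python
import numpy as np

# Toy vocabulary, chosen for illustration only.
vocab = ["cat", "dog", "car"]
index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Vector of zeros with a single 1 at the word's vocabulary index."""
    vec = np.zeros(len(vocab))
    vec[index[word]] = 1.0
    return vec

def bag_of_words(tokens):
    """Count how often each vocabulary word occurs; word order is discarded."""
    counts = np.zeros(len(vocab))
    for token in tokens:
        if token in index:  # out-of-vocabulary tokens are ignored
            counts[index[token]] += 1
    return counts

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# One-hot vectors of different words are orthogonal, so "cat" is exactly
# as unrelated to "dog" as it is to "car".
sim_onehot_cat_dog = cosine(one_hot("cat"), one_hot("dog"))
sim_onehot_cat_car = cosine(one_hot("cat"), one_hot("car"))

# Bag of words cannot tell these two sentences apart.
bow_a = bag_of_words("dog chased cat".split())
bow_b = bag_of_words("cat chased dog".split())

# Hypothetical hand-written 2-d embeddings: related words get nearby vectors.
toy_embeddings = {
    "cat": np.array([0.9, 0.1]),
    "dog": np.array([0.8, 0.2]),
    "car": np.array([0.1, 0.9]),
}
sim_emb_cat_dog = cosine(toy_embeddings["cat"], toy_embeddings["dog"])
sim_emb_cat_car = cosine(toy_embeddings["cat"], toy_embeddings["car"])

print(sim_onehot_cat_dog, sim_onehot_cat_car)
print(bow_a, bow_b)
print(sim_emb_cat_dog > sim_emb_cat_car)
```

With dense vectors, similarity between words becomes something a model can measure, which is the property one-hot and bag-of-words representations lack.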

In this video, I provide a detailed explanation of embeddings and popular embedding techniques like Word2Vec, along with custom embeddings used in Transformer architectures for language generation and other NLP tasks.
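As a rough sketch of the Transformer side, the snippet below looks up token vectors in an embedding table and adds a positional encoding. It assumes the sinusoidal scheme from the original Transformer paper; the video may use a different variant. The sizes, the random table, and the token ids are hypothetical, and in a real model the table is learned during training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; d_model must be even for this sketch.
vocab_size, d_model = 10, 8

# Embedding table: one row per token id. Random here, learned in practice.
embedding_table = rng.normal(size=(vocab_size, d_model))

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding: sin on even dims, cos on odd dims."""
    positions = np.arange(seq_len)[:, None]      # shape (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

# The same token id (3) appears at positions 0 and 2.
token_ids = np.array([3, 1, 3])
token_vectors = embedding_table[token_ids]  # lookup is plain row indexing
pe = positional_encoding(len(token_ids), d_model)
model_input = token_vectors + pe

print(model_input.shape)
```

The repeated token has identical embedding rows, but after the positional encoding is added the two positions no longer share the same input vector, which is how the model can tell them apart.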

Watch the other videos in the Understanding Large Language Models series:

1) Introduction to Large Language Model

https://youtu.be/NLOBYtfdxuM?si=PyVqqNLFsbRPvBI6

2) Preparing dataset and Tokenization

https://youtu.be/bNjVxUDZQfM?si=81fHwXskdl9abdZy

Timestamps:

0:00 - Intro

0:20 - Representing images as numbers

0:54 - Representing text as numbers

2:20 - One Hot Encoding

3:40 - Bag of Words (Unigram, Bigram and N-Gram)

4:59 - Semantic and Contextual Understanding of text

6:28 - Word Embeddings

9:44 - Visualizing Word2Vec Embeddings

10:30 - Word2Vec Training (CBOW and Skip-Gram)

14:46 - Embedding Layer in Transformer Architecture

17:16 - Positional Encoding

18:46 - Outro

Efficient Estimation of Word Representations in Vector Space:

Word2Vec Paper: https://arxiv.org/abs/1301.3781
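The two training setups named in the timestamps, CBOW and Skip-Gram, differ mainly in how training examples are built from a sliding window over the text. The sketch below only generates those examples; it does not train a model, and the function names and the example sentence are hypothetical.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-Gram: each center word is paired with every word in its window."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """CBOW: the surrounding context words jointly predict the center word."""
    examples = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + window + 1]
        if context:
            examples.append((context, target))
    return examples

tokens = "the quick brown fox".split()
sg = skipgram_pairs(tokens, window=1)
cbow = cbow_examples(tokens, window=1)

for pair in sg:
    print(pair)
for example in cbow:
    print(example)
```

Skip-Gram predicts each context word from the center word, while CBOW predicts the center word from its context, so the same window yields several Skip-Gram pairs but a single CBOW example.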

Visualize Word2Vec Embeddings Here:

https://projector.tensorflow.org/

Video Information

Views: 44.6K

Likes: 1.7K

Duration: 19:33

Published: Feb 22, 2025

User Reviews: 4.7 (8)
