Unlocking Transformer Power: The Tradeoff Between Parallelism and Expressivity 🚀

Discover how circuit complexity reveals the capabilities and limitations of transformers, shedding light on their computational expressivity and tradeoffs in parallel processing. Join Will Merrill from NYU for an insightful deep dive!

Simons Institute for the Theory of Computing

1.3K views • Oct 15, 2024

About this video

Will Merrill (New York University)

https://simons.berkeley.edu/talks/will-merrill-new-york-university-2024-09-23

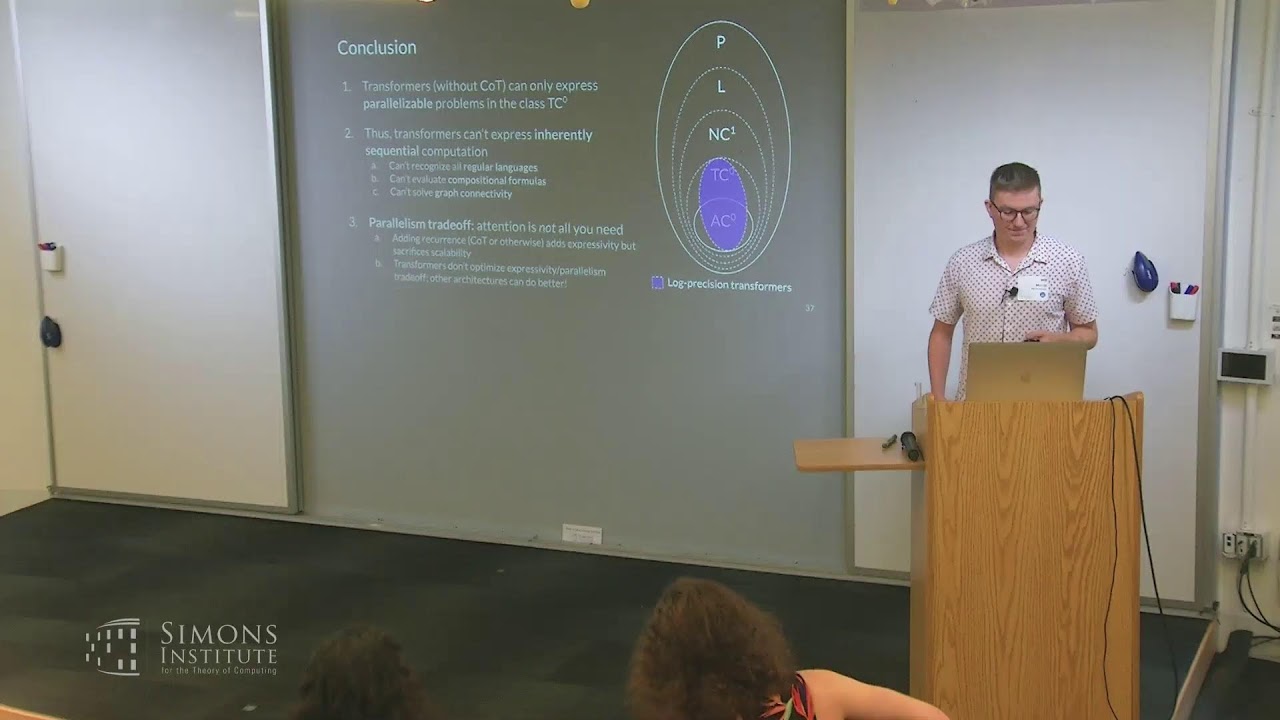

Transformers as a Computational Model

Despite their omnipresence in modern NLP, characterizing the computational power of transformer neural nets remains an interesting open question. We prove that transformers whose arithmetic precision is logarithmic in the number of input tokens (and whose feedforward nets are computable using space linear in their input) can be simulated by constant-depth logspace-uniform threshold circuits. This provides insight on the power of transformers using known results in complexity theory. For example, if L≠P (i.e., not all poly-time problems can be solved using logarithmic space), then transformers cannot even accurately solve linear equalities or check membership in an arbitrary context-free grammar with empty productions. Our result intuitively emerges from the transformer architecture's high parallelizability. We thus speculatively introduce the idea of a fundamental parallelism tradeoff: any model architecture as parallelizable as the transformer will obey limitations similar to it. Since parallelism is key to training models at massive scale, this suggests a potential inherent weakness of the scaling paradigm.
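
The conditional claim in the abstract follows from a short chain of standard inclusions. The sketch below is not taken from the talk page. It assumes that both example problems (solving linear equalities, and membership in an arbitrary context-free grammar with empty productions) are P-complete under logspace reductions, which the abstract implies but does not state. The symbol $\mathcal{T}$ is notation introduced here for the class of problems solvable by log-precision transformers.

$$
\mathcal{T} \;\subseteq\; \text{logspace-uniform } \mathsf{TC}^0 \;\subseteq\; \mathsf{L} \;\subseteq\; \mathsf{P}
$$

$$
A \text{ is } \mathsf{P}\text{-complete and } A \in \mathcal{T}
\;\Longrightarrow\; A \in \mathsf{L}
\;\Longrightarrow\; \mathsf{P} \subseteq \mathsf{L}
\;\Longrightarrow\; \mathsf{L} = \mathsf{P}
$$

The first inclusion is the simulation result stated above. The middle implication uses the fact that every problem in P logspace-reduces to $A$ and that L is closed under logspace reductions. Taking the contrapositive: if $\mathsf{L} \neq \mathsf{P}$, no P-complete problem lies in $\mathcal{T}$.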

Video Information

Views: 1.3K

Likes: 42

Duration: 45:13

Published: Oct 15, 2024
