Tokenizing & Embedding in LLMs 🤖

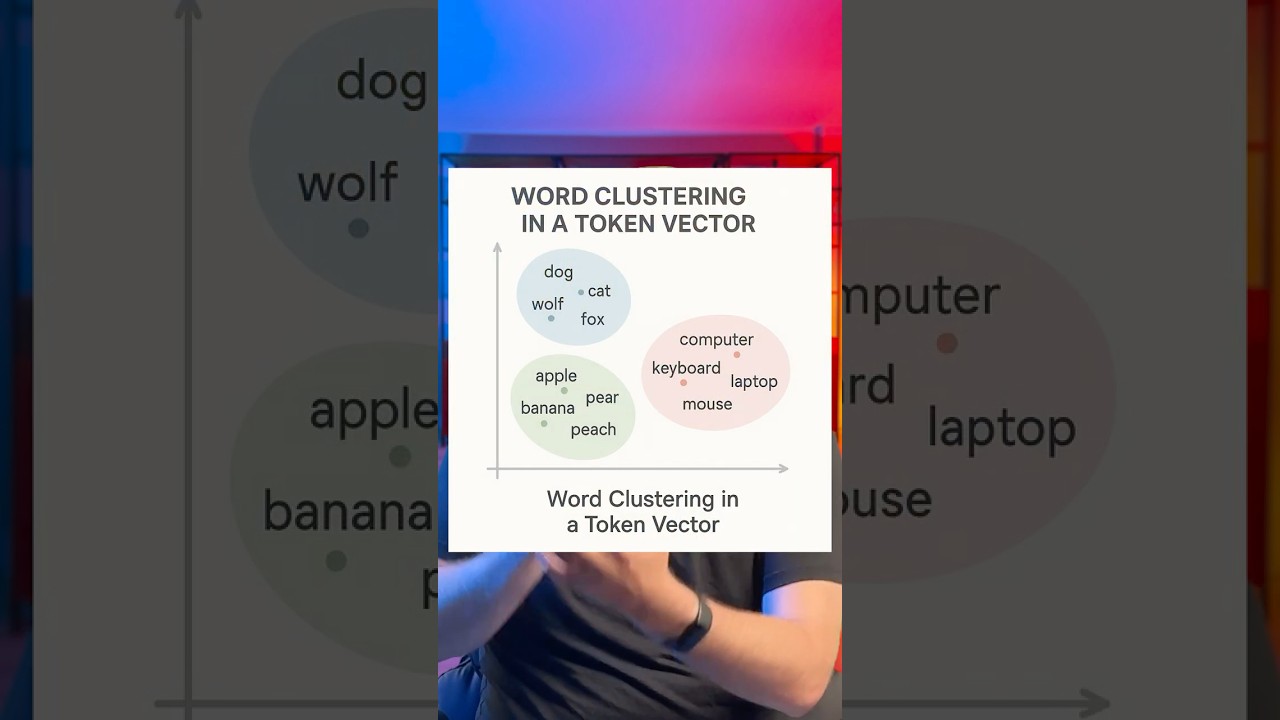

Learn how ChatGPT understands made-up words through tokenization, embeddings, and transformer math in large language models.

ExtrapoLytics

2.1K views • May 13, 2025

About this video

How does ChatGPT understand made-up words?

It’s not guessing; it’s using tokenization, embeddings, and transformer math.

If you're into AI, LLMs, or prompt engineering, this one’s for you.
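
To make the idea concrete, here is a minimal sketch of how a made-up word can still be broken into familiar pieces and mapped to vectors. Everything in it is an assumption for illustration: the tiny `VOCAB`, the greedy longest-match rule, and the random `EMBEDDINGS` table are hypothetical stand-ins. Production models such as ChatGPT use a learned byte-pair-encoding vocabulary and trained embedding weights, not this simplified scheme.

```python
import random

# Hypothetical toy vocabulary of subword pieces (not a real model's vocabulary).
VOCAB = ["un", "bl", "or", "p", "able", "a", "b", "e", "l", "n", "o", "r", "u"]
UNKNOWN = "<unk>"

def tokenize(word, vocab=VOCAB):
    """Split a word into known subword pieces using greedy longest-match."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest remaining candidate first, then shrink it.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No piece matched at this position: emit a placeholder token.
            pieces.append(UNKNOWN)
            i += 1
    return pieces

# Toy embedding table: one small random vector per token id.
# Real models learn these vectors during training.
EMBED_DIM = 4
_rng = random.Random(0)
TOKEN_IDS = {tok: idx for idx, tok in enumerate(VOCAB + [UNKNOWN])}
EMBEDDINGS = [
    [_rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in TOKEN_IDS
]

def embed(pieces):
    """Look up one embedding vector per subword piece."""
    return [EMBEDDINGS[TOKEN_IDS[p]] for p in pieces]

pieces = tokenize("unblorpable")
vectors = embed(pieces)
print(pieces)        # subword pieces for the made-up word
print(len(vectors))  # one vector per piece
```

The model never sees "unblorpable" as a single unknown item. It sees pieces such as "un" and "able" that it has met many times before, and the transformer layers then combine the vectors for those pieces in context.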

#AI #ChatGPT #GPT4 #Tokenization #LLM #PromptEngineering #DeepLearning #MachineLearning #AIExplained #NLP

Video Information

Views: 2.1K

Likes: 70

Duration: 2:44

Published: May 13, 2025

User Reviews

4.5 (2)