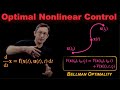

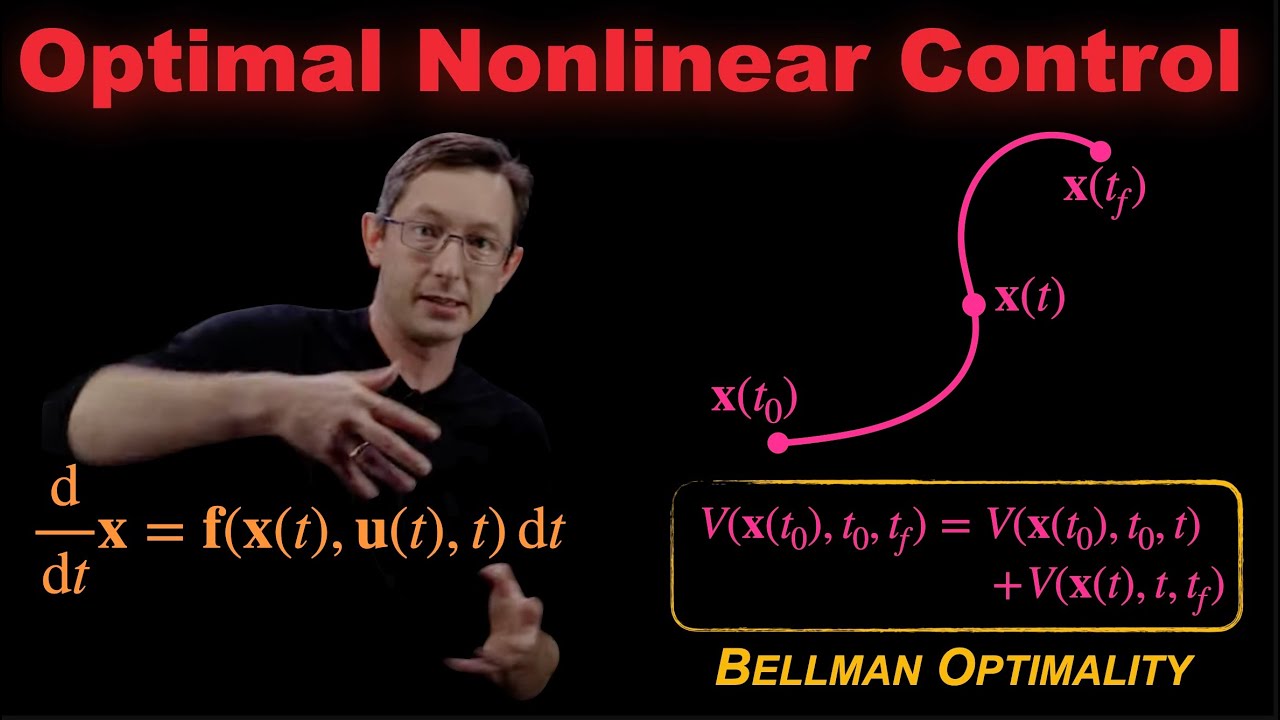

Nonlinear Control with HJB & Dynamic Programming

Learn about optimal nonlinear control using the Hamilton-Jacobi-Bellman (HJB) equation and dynamic programming.

Steve Brunton

100.1K views • Jan 28, 2022

About this video

This video discusses optimal nonlinear control using the Hamilton-Jacobi-Bellman (HJB) equation, and how to solve that equation using dynamic programming.
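To make the dynamic programming idea concrete, here is a minimal sketch of a discretized Bellman recursion on a grid. It is not the derivation from the video: the scalar dynamics `dx/dt = -x**3 + u`, the running cost `x**2 + u**2`, the discount factor `gamma`, the Euler time step `dt`, and the helper name `value_iteration` are all assumptions chosen for illustration.

```python
import numpy as np

def value_iteration(f, cost, xs, us, dt=0.05, gamma=0.99, iters=2000, tol=1e-9):
    """Grid-based Bellman recursion (illustrative sketch, not the video's method):
    V(x) = min_u [ dt * cost(x, u) + gamma * V(x + dt * f(x, u)) ]."""
    X, U = np.meshgrid(xs, us, indexing="ij")          # shape (len(xs), len(us))
    # One explicit Euler step of the dynamics, clipped to stay on the grid.
    X_next = np.clip(X + dt * f(X, U), xs[0], xs[-1])
    stage = dt * cost(X, U)                            # cost paid over one step

    def backup(V):
        # Evaluate V at the successor states by linear interpolation.
        V_next = np.interp(X_next.ravel(), xs, V).reshape(X_next.shape)
        return stage + gamma * V_next

    V = np.zeros(len(xs))
    for _ in range(iters):
        V_new = backup(V).min(axis=1)                  # minimize over the control grid
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    policy = us[backup(V).argmin(axis=1)]              # greedy control at each grid state
    return V, policy

# Assumed example problem: dx/dt = -x^3 + u with running cost x^2 + u^2.
xs = np.linspace(-2.0, 2.0, 81)
us = np.linspace(-2.0, 2.0, 41)
V, policy = value_iteration(lambda x, u: -x**3 + u,
                            lambda x, u: x**2 + u**2,
                            xs, us)
```

The returned `V` approximates the value function on the grid `xs`, and `policy` holds the minimizing control at each grid point. Grid resolution, `dt`, and `gamma` all affect accuracy, so treat the numbers as qualitative.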

Citable link for this video: https://doi.org/10.52843/cassyni.4t5069

This is a lecture in a series on reinforcement learning, following the new Chapter 11 from the 2nd edition of our book "Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control" by Brunton and Kutz.

Book Website: http://databookuw.com

Book PDF: http://databookuw.com/databook.pdf

Amazon: https://www.amazon.com/Data-Driven-Science-Engineering-Learning-Dynamical/dp/1108422098/

Brunton Website: eigensteve.com

This video was produced at the University of Washington.

Video Information

Views: 100.1K

Likes: 2.1K

Duration: 17:39

Published: Jan 28, 2022

User Reviews: 4.7 (20)