Mastering Neural Networks: The Essential Guide to Single Perceptrons 🤖

Discover the fundamentals of neural networks by learning how a single perceptron functions. Perfect for beginners aiming to build a strong foundation in AI and machine learning!

Global Science Network

7.8K views • Nov 24, 2024

About this video

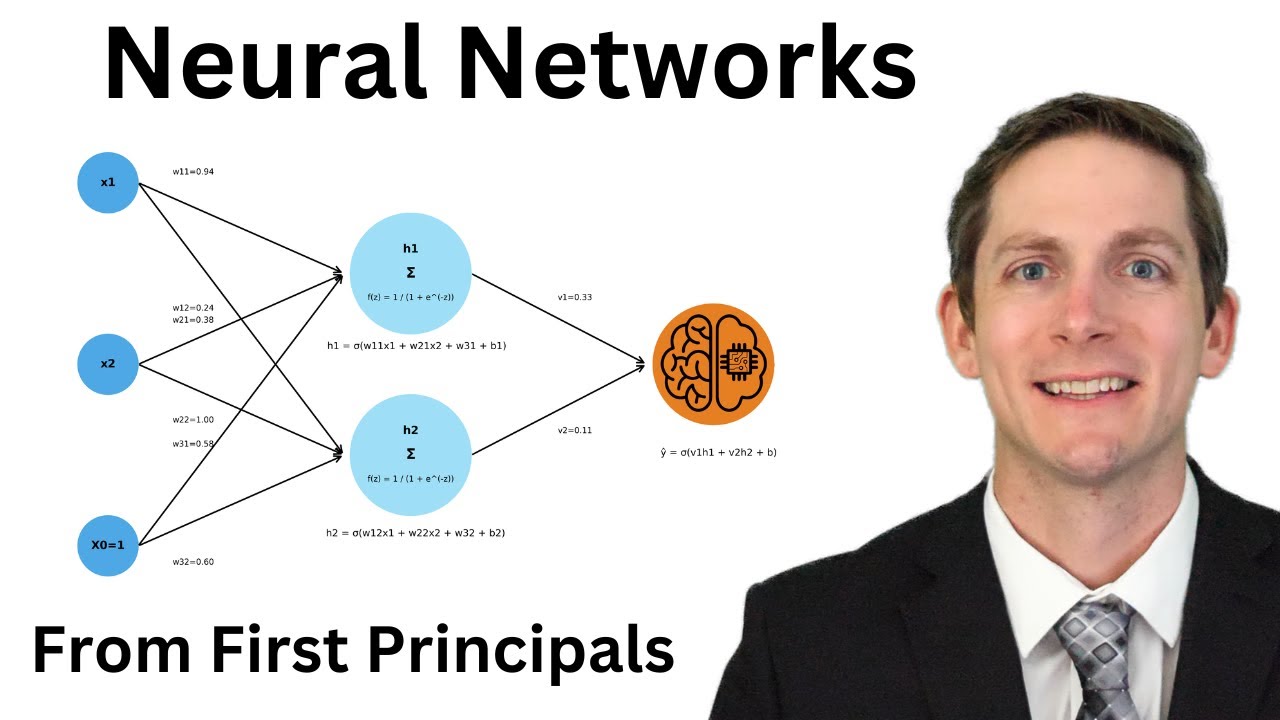

Understanding the fundamentals of neural networks is important, and the single perceptron, the simplest neural network, is a good place to start.

A single perceptron can separate two linearly separable classes, for example in 2D or 3D. It learns to do this with the perceptron learning algorithm, also called the perceptron learning rule, which is sometimes loosely referred to as the delta rule.
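For reference, the rule can be written out in its common textbook form. This assumes class 1 is encoded as 0 and class 2 as 1, a step function that outputs 1 when its input is greater than or equal to 0, and a learning rate η. These encodings and symbols are our assumptions and may differ from the notation used in the video. When a prediction is correct, y − ŷ = 0 and nothing changes; a single worked update follows the rule.

```latex
\hat{y} = \operatorname{step}(w_1 x_1 + w_2 x_2 + b), \qquad
\operatorname{step}(z) = \begin{cases} 1 & z \ge 0 \\ 0 & z < 0 \end{cases}

w_i \leftarrow w_i + \eta\,(y - \hat{y})\,x_i, \qquad
b \leftarrow b + \eta\,(y - \hat{y})

% One update with \eta = 1, starting from w_1 = w_2 = b = 0, on x = (1, 1) with y = 0:
z = 0 \;\Rightarrow\; \hat{y} = 1,\quad y - \hat{y} = -1
\;\Rightarrow\; w_1 = w_2 = -1,\; b = -1
\;\Rightarrow\; z = -1 - 1 - 1 = -3,\; \hat{y} = 0 \ \text{(now correct)}
```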

Given the inputs x1 and x2, the perceptron predicts whether the point belongs to class 1 or class 2. If the prediction is wrong, the weights and bias are adjusted in the direction that reduces the error. This is repeated over multiple epochs (full passes through the training data) until the network is trained, that is, until every training point is classified correctly.
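The training loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the video's code: the function names (`step`, `predict`, `train_perceptron`), the zero initial weights, the learning rate of 1.0, the 100-epoch cap, and the toy data points are all hypothetical choices made for the example. Class 1 is encoded as 0 and class 2 as 1.

```python
from typing import List, Sequence, Tuple


def step(z: float) -> int:
    """Step activation: 1 if z >= 0, else 0 (the threshold convention is an assumption)."""
    return 1 if z >= 0 else 0


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Weighted sum of the inputs plus the bias, passed through the step function."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return step(z)


def train_perceptron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    learning_rate: float = 1.0,
    max_epochs: int = 100,
) -> Tuple[List[float], float, int]:
    """Perceptron learning rule. Returns (weights, bias, epochs_run).

    Training stops after the first epoch with zero misclassifications.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                # Nudge the weights and bias in the direction that reduces the error.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:
            return weights, bias, epoch
    return weights, bias, max_epochs


if __name__ == "__main__":
    # Hypothetical toy data: class 1 encoded as 0, class 2 encoded as 1.
    points = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (5.0, 5.0), (6.0, 5.0), (5.0, 6.0)]
    labels = [0, 0, 0, 1, 1, 1]
    w, b, epochs = train_perceptron(points, labels)
    print(w, b, epochs)  # [1.0, 1.0] -5.0 4
    print([predict(w, b, p) for p in points])  # [0, 0, 0, 1, 1, 1]
```

On this toy data the loop settles on the boundary x1 + x2 − 5 = 0 after four epochs. Because the weights start at zero, the learning rate only rescales w and b (in exact arithmetic) and does not change which points get misclassified; it matters more when the weights are initialised randomly.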

Examples of a perceptron separating two classes of data are provided in 2D and 3D, using the step activation function, along with an in-depth discussion of how the decision boundary (a line in 2D, a plane in 3D) is adjusted during training. We then look at how a single perceptron can implement the AND, OR, NAND, and NOR logic gates. The XOR and XNOR gates, however, are not linearly separable, so a multi-layer neural network is needed to solve them.
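The logic-gate results can be checked with a small Python sketch that trains a two-input perceptron on each gate's truth table using the step function. The names `train_gate`, `GATES`, and `learnable_gates`, the learning rate of 1.0, and the 50-epoch limit are our own illustrative assumptions, not taken from the video. A gate counts as learned if some epoch ends with zero mistakes, and the learned decision boundary is the line w1*x1 + w2*x2 + b = 0.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple


def step(z: float) -> int:
    """Step activation: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def train_gate(
    inputs: Sequence[Tuple[int, int]],
    targets: Sequence[int],
    learning_rate: float = 1.0,
    max_epochs: int = 50,
) -> Tuple[List[float], float, bool]:
    """Train a 2-input perceptron with the perceptron rule; returns (weights, bias, converged)."""
    w = [0.0, 0.0]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), y in zip(inputs, targets):
            error = y - step(w[0] * x1 + w[1] * x2 + bias)
            if error != 0:
                mistakes += 1
                w[0] += learning_rate * error * x1
                w[1] += learning_rate * error * x2
                bias += learning_rate * error
        if mistakes == 0:
            return w, bias, True  # every truth-table row is now correct
    return w, bias, False  # no separating line found within max_epochs


# Hypothetical gate table: each entry maps two bits to the gate's output bit.
GATES: Dict[str, Callable[[int, int], int]] = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "NAND": lambda a, b: 1 - (a & b),
    "NOR": lambda a, b: 1 - (a | b),
    "XOR": lambda a, b: a ^ b,
    "XNOR": lambda a, b: 1 - (a ^ b),
}

INPUTS = list(product([0, 1], repeat=2))  # (0, 0), (0, 1), (1, 0), (1, 1)


def learnable_gates() -> Dict[str, bool]:
    """Map each gate name to whether a single perceptron learned it."""
    results: Dict[str, bool] = {}
    for name, gate in GATES.items():
        targets = [gate(a, b) for a, b in INPUTS]
        w, bias, converged = train_gate(INPUTS, targets)
        results[name] = converged
        if converged:
            # Decision boundary: w1*x1 + w2*x2 + b = 0
            print(f"{name}: learned, boundary {w[0]}*x1 {w[1]:+}*x2 {bias:+} = 0")
        else:
            print(f"{name}: not learned within the epoch limit")
    return results


if __name__ == "__main__":
    learnable_gates()
```

With these settings AND, OR, NAND, and NOR converge within a few epochs, while XOR and XNOR always hit the epoch limit: since no straight line separates their outputs, every epoch contains at least one mistake, which is why a hidden layer is needed for those two gates.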

Consider Supporting the Global Science Network.

Ko-fi (Preferred as there is zero platform fee for the transactions)

https://ko-fi.com/globalsciencenetwork

Patreon (If you prefer Patreon)

https://www.patreon.com/c/GlobalScienceNetwork

Video Information

Views: 7.8K
Likes: 402
Duration: 14:34
Published: Nov 24, 2024
User Reviews: 4.6 (1)