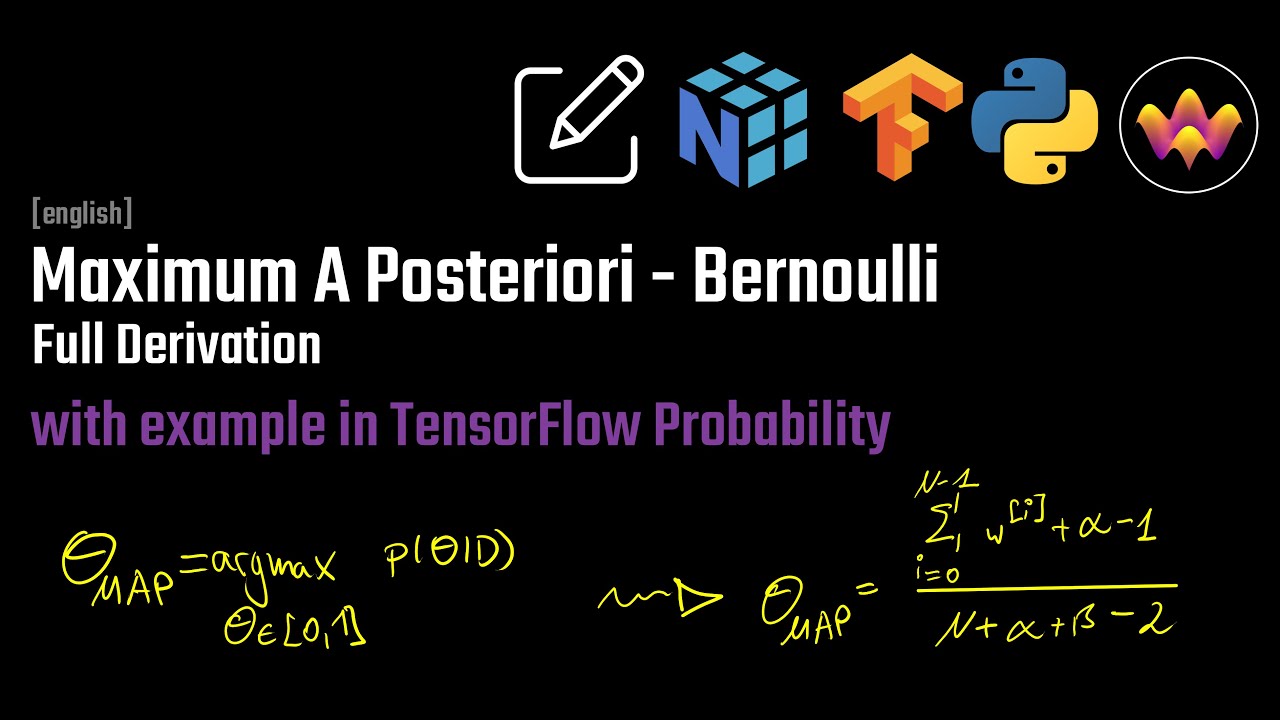

Maximum A Posteriori Estimate (MAP) for Bernoulli: Derivation and Implementation with TensorFlow Probability

This video explains the derivation of the Maximum A Posteriori Estimate (MAP) for Bernoulli distributions, highlighting how prior knowledge influences the estimate, and demonstrates its implementation using TensorFlow Probability.

Machine Learning & Simulation

5.0K views • Mar 18, 2021

About this video

In this video, we derive the Maximum A Posteriori Estimate (MAP). This estimate is based not only on the dataset but also on prior knowledge, encoded in the hyperparameters of the prior distribution over the parameter. It is therefore more robust to corrupt, noisy, or incomplete data, but choosing the hyperparameters requires expert knowledge.
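To make the idea concrete, here is a short sketch of the derivation. It assumes a Beta prior with hyperparameters $a, b > 1$ (the conjugate choice for a Bernoulli likelihood; this description does not name the prior, so treat it as an assumption) and uses $x_1, \dots, x_N \in \{0, 1\}$ for the data and $\theta$ for the Bernoulli parameter. These symbols are chosen here for illustration.

```latex
\begin{align}
p(\theta \mid x_{1:N})
  &\propto \underbrace{\prod_{i=1}^{N} \theta^{x_i} (1-\theta)^{1-x_i}}_{\text{likelihood}}
     \; \underbrace{\theta^{a-1} (1-\theta)^{b-1}}_{\text{Beta}(a,b)\text{ prior}} \\
\log p(\theta \mid x_{1:N})
  &= \Big(\sum_{i=1}^{N} x_i + a - 1\Big) \log\theta
   + \Big(N - \sum_{i=1}^{N} x_i + b - 1\Big) \log(1-\theta) + \text{const} \\
0 &= \frac{\sum_{i} x_i + a - 1}{\theta} - \frac{N - \sum_{i} x_i + b - 1}{1-\theta} \\
\hat{\theta}_{\text{MAP}}
  &= \frac{\sum_{i=1}^{N} x_i + a - 1}{N + a + b - 2}
\end{align}
```

Under this assumption, setting $a = b = 1$ (a uniform prior) recovers the MLE, $\hat{\theta}_{\text{MLE}} = \frac{1}{N}\sum_i x_i$.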

You can find the notes here: https://raw.githubusercontent.com/Ceyron/machine-learning-and-simulation/main/english/essential_pmf_pdf/bernoulli_maximum_a_posteriori_estimate.pdf

After the derivation, we check our results in TensorFlow Probability with a clean and a corrupt dataset. In both cases, our informed MAP is superior to the uninformed MLE.
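A minimal sketch of such a comparison, in plain Python with the standard library only (this is not the TensorFlow Probability code from the video). It assumes a Beta(a, b) prior with a, b > 1, for which the MAP estimate has the closed form (sum(x) + a - 1) / (N + a + b - 2). The function names, the true parameter, the hyperparameters, the dataset size, and the way the data is corrupted (overwriting the first entries with ones) are all hypothetical choices made for illustration.

```python
import random


def bernoulli_mle(data):
    """Maximum likelihood estimate: the fraction of ones in the data."""
    if not data:
        raise ValueError("data must be non-empty")
    return sum(data) / len(data)


def bernoulli_map(data, a, b):
    """MAP estimate under an assumed Beta(a, b) prior.

    The closed form below is the mode of the Beta posterior; it is an
    interior maximum when a > 1 and b > 1.
    """
    return (sum(data) + a - 1) / (len(data) + a + b - 2)


def sample_bernoulli(theta, n, rng):
    """Draw n Bernoulli(theta) samples as a list of 0/1 integers."""
    return [1 if rng.random() < theta else 0 for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(42)   # fixed seed for reproducibility
    theta_true = 0.3          # hypothetical ground truth
    a, b = 3.0, 6.0           # hypothetical prior, mode (a-1)/(a+b-2) = 2/7

    clean = sample_bernoulli(theta_true, 50, rng)
    # Hypothetical corruption: the first 15 readings are stuck at 1.
    corrupt = [1] * 15 + clean[15:]

    for name, data in [("clean", clean), ("corrupt", corrupt)]:
        mle = bernoulli_mle(data)
        map_estimate = bernoulli_map(data, a, b)
        print(f"{name:8s} MLE={mle:.3f}  MAP={map_estimate:.3f}  true={theta_true}")
```

With a prior whose mode lies near the true parameter, the MAP estimate is pulled toward that mode, which is why it tends to suffer less from corruption. A poorly chosen prior would pull it in the wrong direction instead.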

-------

📝 : Check out the GitHub Repository of the channel, where I upload all the handwritten notes and source-code files (contributions are very welcome): https://github.com/Ceyron/machine-learning-and-simulation

📢 : Follow me on LinkedIn or Twitter for updates on the channel and other cool Machine Learning & Simulation stuff: https://www.linkedin.com/in/felix-koehler and https://twitter.com/felix_m_koehler

💸 : If you want to support my work on the channel, you can become a patron on Patreon here: https://www.patreon.com/MLsim

-------

Timestamps:

0:00 Opening

0:17 Intro

03:31 MLE vs MAP

07:20 Posterior

11:48 Log-Posterior

15:07 Maximizing the Log-Posterior

22:08 TensorFlow Probability

Video Information

Views: 5.0K

Likes: 93

Duration: 29:03

Published: Mar 18, 2021

User Reviews: 4.6 (4)