Agent Arena by Gorilla X: Test AI Agents 🚀

Explore how AI agents perform in search, finance, RAG, and more in the Gorilla X Agent Arena. Compare and analyze their capabilities.

Shishir Girishkumar Patil

178 views • Oct 4, 2024

About this video

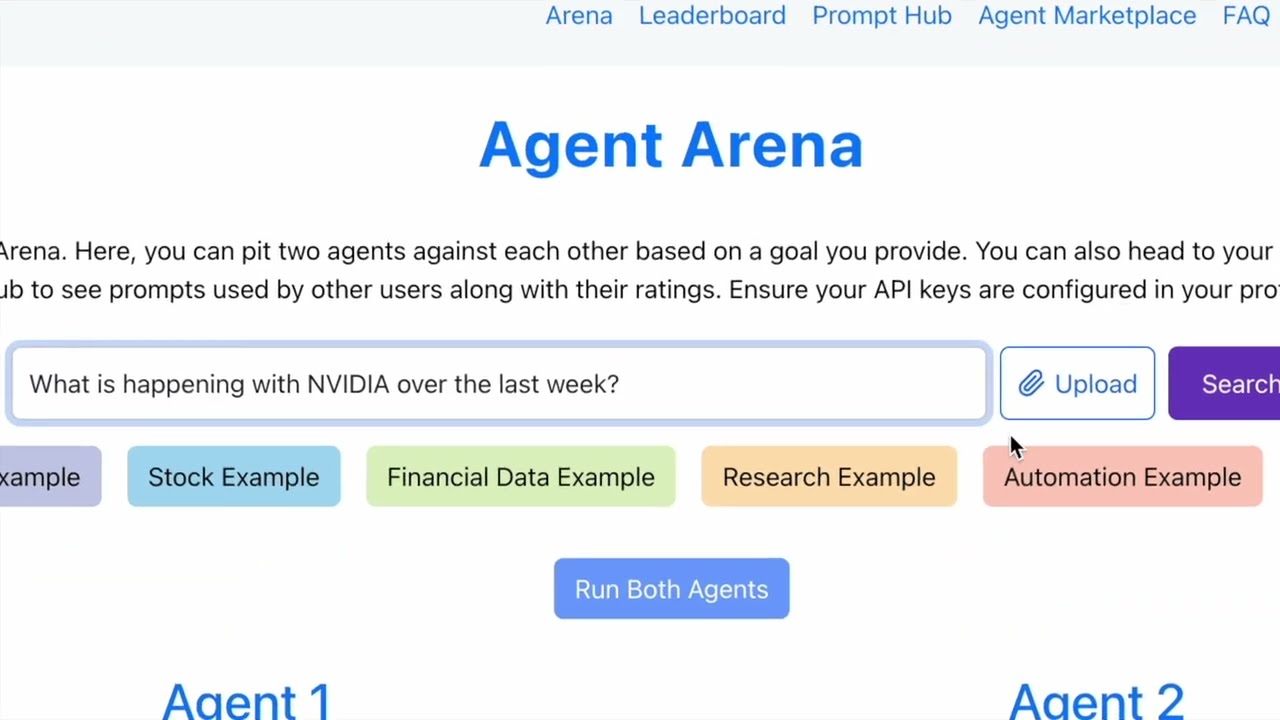

🚀 Introducing the Agent Arena by 🦍 Gorilla X LMSYS Chatbot Arena 🎯

How do different agents stack up in tasks like search, finance, RAG, and beyond? Which model is the most effective for agentic tasks? What tools do users prefer for enhanced agentic capabilities? Explore these questions and more!

✏️Blog: https://gorilla.cs.berkeley.edu/blogs/14_agent_arena.html

🏟️Arena: agent-arena.com

📊Leaderboard: agent-arena.com/leaderboard

⚱️Dataset of 2k pairwise battles: https://github.com/ShishirPatil/gorilla/tree/main/agent-arena#evaluation-directory

❓Which models, frameworks, or tools do users prefer? Which agents excel at financial and numerical analysis? Which agents are best at finding the "needle in the haystack" in massive corpora of data? Which agents are best integrated with online platforms (Gmail, Yelp, etc.)? Agents = LLMs + Tools + Frameworks. With Agent Arena, you can compare combinations of large language models, tools (like code interpreters and APIs), and frameworks (including LangChain, LlamaIndex, and CrewAI) to find the best mix for your needs. With a novel ranking system, we evaluate agents based on their performance in real-time, head-to-head tasks, tracking the strengths of individual components as well as their combined strengths. This provides deeper insight into specific use cases and shows users which agent performs best for their needs.

In a world of crowd-sourced evaluations, who evaluates the crowd? With Prompt-Hub, users can publish, upvote, and explore prompts used for agent evaluations, creating a collaborative space for the community.

From the Agent Arena team: Nithik Yekollu, Arth Bohra, Kai Wen, Sai Kolasani, Wei-Lin Chiang, Anastasios Angelopoulos, Prof. Joseph Gonzalez, Prof. Ion Stoica, and Shishir G. Patil.

Come see which agent rises to the top! 🎉

Video Information

Duration: 0:35
