KNN vs Linear Models: Simple Comparison for Classification 📊

Discover the key differences between K-Nearest Neighbors and Linear Models like Linear and Logistic Regression. Perfect for data science interviews and understanding classification methods!

Tech - jroshan

1.7K views • May 21, 2025

About this video

🎯 KNN vs Linear Models for Classification – Explained Simply!

Comparing KNN (K-Nearest Neighbors) with linear models (Linear Regression and Logistic Regression), focused on classification tasks, with a clean structure.

🚀 Join the groups for the latest updates and notes:

https://lnkd.in/dYh-u4wP

🧠 1. What is KNN?

🔹 K-Nearest Neighbors (KNN) is a non-parametric algorithm that classifies a point by the majority class among its K closest neighbors.

✅ No explicit training step: the model just stores the training data, and nearly all computation happens at prediction time

📍 Good for small datasets and nonlinear patterns
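
To make the majority-vote idea concrete, here is a minimal sketch in plain Python. The function name `knn_predict` and the toy points are made up for illustration, and Euclidean distance is assumed; this is not a production implementation.

```python
from collections import Counter
import math

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # "Training" is just keeping train_X / train_y around; the work happens here.
    distances = [
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical toy data: two small clusters.
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_y = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_X, train_y, (2.0, 2.0), k=3))  # query near the "A" cluster
print(knn_predict(train_X, train_y, (6.0, 7.0), k=3))  # query near the "B" cluster
```

Every prediction scans all stored points, which is why prediction gets slow as the dataset grows.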

📈 2. What is a Linear Model?

Linear Regression: for continuous outputs, not for classification

Logistic Regression: for binary and multi-class classification

✅ Fast to train

📊 Assumes linear decision boundary

🚫 Not good for complex, nonlinear datasets
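
For comparison, a binary logistic regression can be sketched in a few lines. The sketch below assumes plain batch gradient descent on the log loss with a made-up learning rate and epoch count; real libraries use more robust solvers and regularization. All names and data are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.1, epochs=5000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n_features = len(X[0])
    n = len(X)
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    """Linear decision boundary: class 1 when w.x + b >= 0."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else 0

# Hypothetical toy data: two clusters that a straight line can separate.
X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
y = [0, 0, 0, 1, 1, 1]

w, b = fit_logistic(X, y)
print("weights:", w, "bias:", b)
print([predict(w, b, x) for x in X])
```

After fitting, prediction is a single dot product, and the learned weights can be inspected directly.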

⚔️ KNN vs Logistic Regression - Classification

| Feature | KNN | Logistic Regression |
|---|---|---|
| Type | Lazy / Non-parametric | Eager / Parametric |
| Training Speed | Fast | Slower |
| Prediction Speed | Slow (computes distances) | Fast |
| Handles Non-linearity | ✅ Yes | ❌ No (unless polynomial features) |
| Interpretability | ❌ Harder to explain | ✅ Coefficients are meaningful |
| Sensitive to Noise | ✅ Yes | ❌ Less |

🧪 Accuracy Tip

~ Use KNN when:

You have small data

Data is not linearly separable

Interpretability is less important

~ Use Logistic Regression when:

You want a fast, explainable model

Classes are roughly linearly separable

You care about model coefficients

🔍 Visual Example

KNN: Draws complex boundaries to adapt to data

Logistic Regression: Draws a straight line or plane

(You can include a plot showing decision boundaries)
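
The same contrast can be checked numerically without a plot. The sketch below uses hypothetical XOR-style points, a 1-nearest-neighbor rule, and a brute-force search over straight-line rules on a coarse grid. The grid search is only a stand-in for "any linear boundary", not an actual logistic regression fit.

```python
import itertools
import math

# Hypothetical XOR-style data: opposite corners share a class.
train_X = [(0, 0), (1, 1), (0, 1), (1, 0)]
train_y = [0, 0, 1, 1]
test_X = [(0.1, 0.1), (0.9, 0.9), (0.1, 0.9), (0.9, 0.1)]
test_y = [0, 0, 1, 1]

def nearest_neighbor(query):
    """1-NN: return the label of the closest training point."""
    idx = min(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    return train_y[idx]

def accuracy(predict):
    return sum(predict(x) == y for x, y in zip(test_X, test_y)) / len(test_X)

knn_acc = accuracy(nearest_neighbor)

# Try every straight-line rule w1*x1 + w2*x2 + b >= 0 on a coarse grid.
grid = [i / 2 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
best_linear_acc = max(
    accuracy(lambda x, w1=w1, w2=w2, b=b: int(w1 * x[0] + w2 * x[1] + b >= 0))
    for w1, w2, b in itertools.product(grid, repeat=3)
)

print("1-NN accuracy:", knn_acc)
print("best linear accuracy on the grid:", best_linear_acc)
```

No single straight line can put both class-0 corners on one side and both class-1 corners on the other, so the linear rules always miss at least one of the four test points.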

Key Advantages:

🧠 Easy to implement: KNN is a simple algorithm to understand and implement, making it perfect for beginners.

🧠 Non-parametric: KNN doesn't assume any specific distribution for the data, making it flexible for various problem types.

🧠 Handling non-linear relationships: KNN can capture complex relationships between features.

~ When to Use KNN:

1. Small to medium-sized datasets: KNN can be computationally expensive for large datasets.

2. Data with non-linear relationships: KNN excels in capturing complex patterns.

3. Classification and regression tasks: KNN can handle both types of problems.

Real-World Applications:

~ Customer segmentation: KNN can assign customers to known segments based on behavior and demographics.

~ Recommendation systems: KNN can suggest products based on user preferences.

~ Medical diagnosis: KNN can aid in disease diagnosis by identifying similar patient profiles.

🧠 Common Challenges:

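1. Choosing the right value of K: A small K is sensitive to noise, while a large K blurs the boundaries between classes.

2. Handling high-dimensional data: KNN can suffer from the curse of dimensionality, because distances become less informative as the number of features grows.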
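
The dimensionality problem can be illustrated with a rough experiment. The sketch below draws hypothetical uniform random points and measures how much farther the farthest point is than the nearest one, relative to the nearest. The function name `relative_contrast`, the sample size, and the seed are all illustrative choices.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """(farthest - nearest) / nearest distance from a random query point."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)

for dim in (2, 10, 100, 1000):
    print(dim, round(relative_contrast(dim), 2))
```

As the dimension grows, the contrast shrinks: the "nearest" neighbor is barely nearer than everything else, so a vote among neighbors carries less information.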

🧠 Best Practices:

~ Data preprocessing: Scale features and handle missing values.

~ Choose the right distance metric: Euclidean, Manhattan, or Minkowski distances can be used.

~ Experiment with different K values: Find the optimal K value using cross-validation.
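
These three practices can be combined in one small sketch: standardize the features, use a Minkowski distance with a configurable `p`, and compare K values with leave-one-out cross-validation. Everything here (function names, the age/income data, the candidate K values) is hypothetical and kept deliberately tiny.

```python
import statistics
from collections import Counter

def standardize(X):
    """Scale each feature to zero mean and unit variance (population std)."""
    cols = list(zip(*X))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard constant columns
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in X]

def minkowski(a, b, p=2):
    """p=1 is Manhattan distance, p=2 is Euclidean distance."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)

def knn_predict(train_X, train_y, query, k, p=2):
    order = sorted(range(len(train_X)), key=lambda i: minkowski(train_X[i], query, p))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def loo_accuracy(X, y, k, p=2):
    """Leave-one-out cross-validation: hold out each point once."""
    hits = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        hits += knn_predict(rest_X, rest_y, X[i], k, p) == y[i]
    return hits / len(X)

# Hypothetical data: (age in years, income in dollars) -> class label.
raw_X = [(25, 30_000), (30, 32_000), (35, 31_000), (28, 29_000),
         (45, 90_000), (50, 95_000), (55, 88_000), (48, 92_000)]
y = [0, 0, 0, 0, 1, 1, 1, 1]

X = standardize(raw_X)  # without this, income would dominate every distance
scores = {k: loo_accuracy(X, y, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, "best K:", best_k)
```

Scaling matters because KNN compares raw distances: a feature measured in tens of thousands would otherwise swamp one measured in tens.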

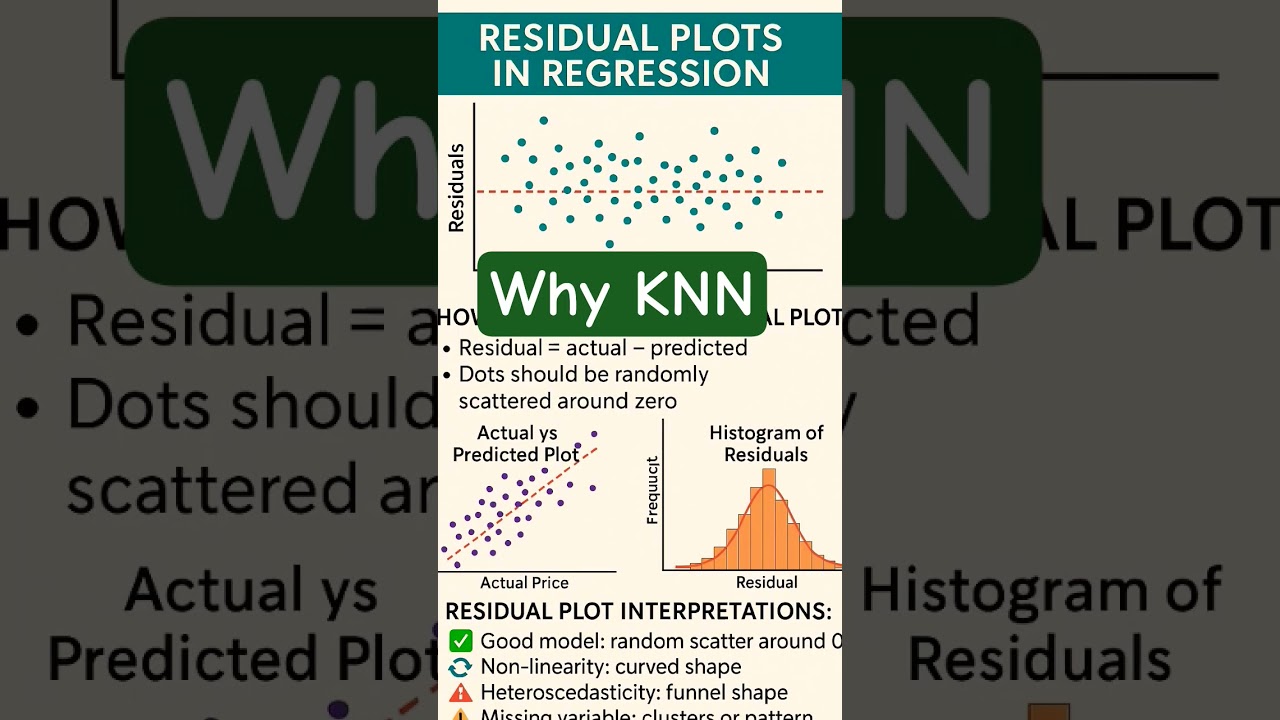

🔍 What is a Residual Plot?

A residual is the difference between the actual and predicted value:

$\text{Residual} = y_{\text{actual}} - y_{\text{predicted}}$

~ If residuals are randomly scattered → good model fit.

~ If residuals show patterns → underfitting or non-linear relationship.
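
A small worked example shows what "patterns" means here. The sketch below fits a straight line by ordinary least squares to hypothetical quadratic data and prints the residuals; the data and the helper name `fit_line` are illustrative.

```python
import statistics

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x

# Hypothetical data with a curved (quadratic) relationship.
xs = [0, 1, 2, 3, 4, 5, 6]
ys = [x ** 2 for x in xs]

slope, intercept = fit_line(xs, ys)
residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]  # actual - predicted

for x, r in zip(xs, residuals):
    print(x, round(r, 2))
```

The residuals are positive at both ends and negative in the middle. That U shape is the kind of pattern that signals the straight line is underfitting a non-linear relationship.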

👇 Drop your thoughts or questions below. Let’s learn together!

If this was helpful, please repost 📌

💥 Follow Roshan Jha

🚀 Join my YouTube channel for in-depth discussions

https://lnkd.in/gr4FGKtW

#KNN #MachineLearning #DataScience #Classification #Regression #Algorithms #DataAnalysis #LinkedInLearning #ProductAnalysis #CustomerSegmentation #RecommendationSystem #MedicalDiagnosis #Google #GenAi #DataAnalysis


Video Information

Views: 1.7K • Duration: 0:05 • Published: May 21, 2025

User Reviews: 3.7 (1)