Coin-Flipping in the Brain: Probabilistic Computation and Learning in the Assembly Model

An exploration of probabilistic computation and learning in neural assemblies, focusing on how noise in the brain might be harnessed within the assembly model to make probabilistic choices and learn statistical models from sequences of stimuli.

Simons Institute for the Theory of Computing

333 views • Aug 1, 2024

About this video

Santosh Vempala (Georgia Institute of Technology)

https://simons.berkeley.edu/talks/santosh-vempala-georgia-institute-technology-2024-06-28

Understanding Higher-Level Intelligence from AI, Psychology, and Neuroscience Perspectives

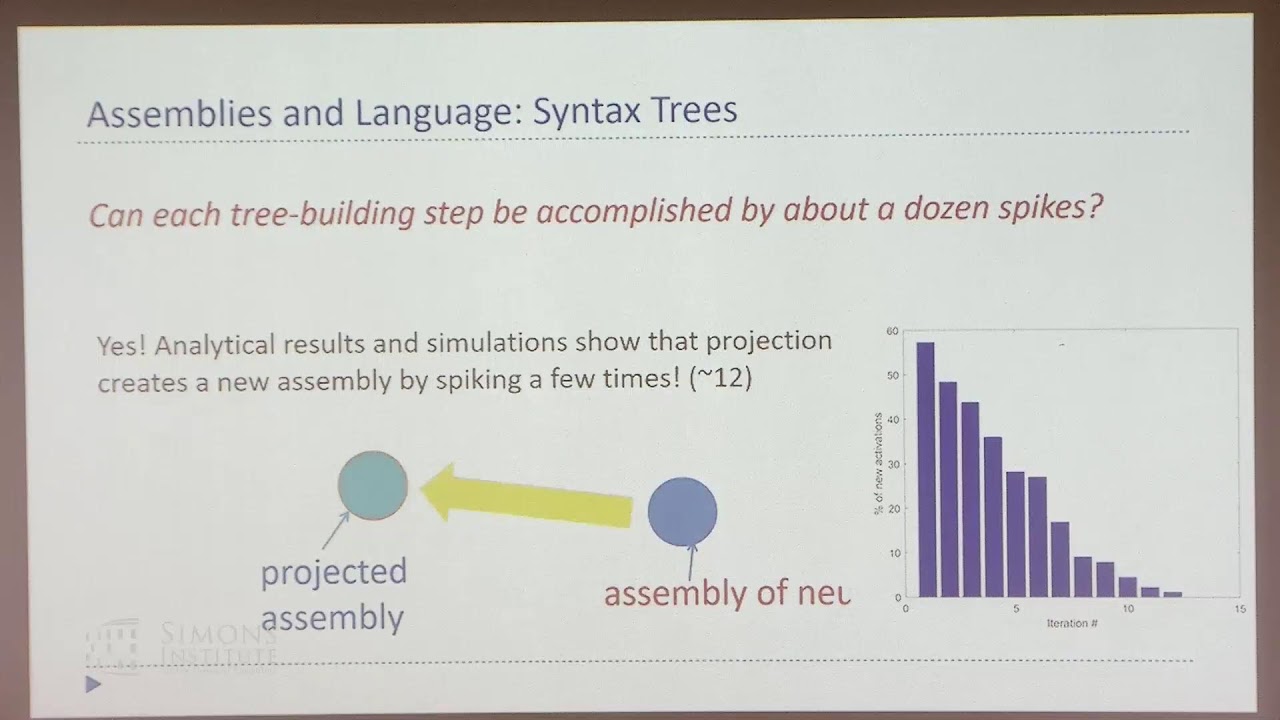

An important aspect of intelligence is dealing with uncertainty: developing good predictions about one’s environment and converting these predictions into decisions. The brain itself appears to be noisy at many levels, from the chemical processes driving development and neuronal activity to trial-to-trial variability in responses to stimuli. One hypothesis is that the noise inherent to the brain’s mechanisms is used to sample from a model of the world and generate predictions. To investigate this hypothesis, we study the emergence of statistical learning in a biologically plausible computational model of the brain. The model is based on stylized neurons and synapses, plasticity, and inhibition, and it gives rise to assemblies: groups of neurons whose coordinated firing is tantamount to recalling an object, location, concept, or other primitive item of cognition. We show in theory and in simulation that connections between assemblies record statistics, and that ambient noise can be harnessed to make probabilistic choices between assemblies; statistical models such as Markov chains are learned entirely from the presentation of sequences of stimuli. Our results provide a foundation for biologically plausible probabilistic computation and lend theoretical support to the hypothesis that noise is a useful component of the brain’s mechanism for cognition.
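The idea that connection strengths can record statistics, and that noise plus a winner-take-all choice can then sample from them, can be illustrated with a toy sketch. This is not the assembly model from the talk: there are no neurons, inhibition, or assemblies here. The names `learn_weights` and `noisy_winner` are hypothetical, the "plasticity" is a plain count of observed transitions, and the noise is assumed to be Gumbel-distributed, a choice made only because it makes the noisy winner land on each successor with probability proportional to its weight.

```python
import math
import random
from collections import defaultdict


def learn_weights(sequence, increment=1.0):
    """Strengthen the link a -> b each time b follows a in the sequence.

    A crude stand-in for plasticity (assumption): weights are transition counts.
    """
    weights = defaultdict(lambda: defaultdict(float))
    for a, b in zip(sequence, sequence[1:]):
        weights[a][b] += increment
    return weights


def noisy_winner(outgoing, rng):
    """Return the successor whose log-weight plus Gumbel noise is largest.

    With Gumbel noise (assumption), the winner is chosen with probability
    proportional to its weight, so noise alone drives the random choice.
    """
    best, best_score = None, -math.inf
    for successor, weight in outgoing.items():
        u = rng.random()
        while u == 0.0:  # avoid log(0); random() can return exactly 0.0
            u = rng.random()
        score = math.log(weight) + (-math.log(-math.log(u)))
        if score > best_score:
            best, best_score = successor, score
    return best


# Present a short stimulus sequence, then sample successors of "A".
sequence = list("ABACABAB")
weights = learn_weights(sequence)

rng = random.Random(0)
trials = 20000
counts = defaultdict(int)
for _ in range(trials):
    counts[noisy_winner(weights["A"], rng)] += 1
frequencies = {s: c / trials for s, c in counts.items()}
print(dict(weights["A"]), frequencies)
```

In this sequence "A" is followed by "B" three times and by "C" once, so the sampled frequencies should sit close to the learned transition probabilities of 3/4 and 1/4. Whether biological noise has the right shape to produce such proportional sampling is exactly the kind of question the talk addresses; the sketch simply assumes it.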

Video Information

Views: 333
Likes: 13
Duration: 42:35
Published: Aug 1, 2024
