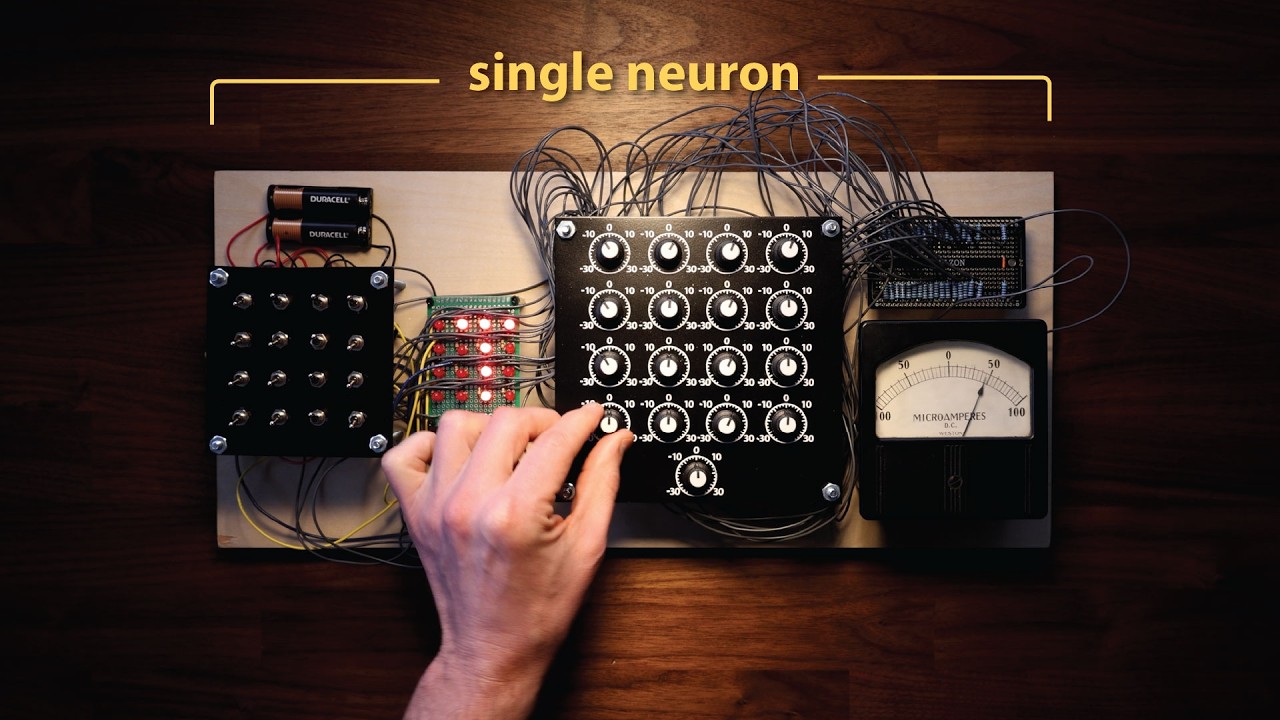

Discover the Hidden Tech Behind ChatGPT: The Perceptron 🤖

Uncover how 100 million perceptrons power ChatGPT and learn about the fascinating technology driving AI today. Plus, get $20 off your first AG1 subscription at https://drinkag1.com/welchlabs!

Welch Labs

730.6K views • Feb 1, 2025

About this video

Go to https://drinkag1.com/welchlabs to subscribe and save $20 on your first AG1 subscription! Thanks to AG1 for sponsoring today's video.

Imaginary Numbers book is back in stock! Update at 23:11

https://www.welchlabs.com/resources/imaginary-numbers-book

Welch Labs Posters:

https://www.welchlabs.com/resources

Cool Interactive Perceptron Simulator made by viewer Priyangsu Banerjee!

https://priyangsubanerjee.github.io/perceptron-simulator/

Special Thanks to Patrons https://www.patreon.com/welchlabs

Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin, Nicolas baumann, Jason Singh, Robert Riley, vornska, Barry Silverman

References

Rumelhart, D. E., McClelland, J. L. (1987). Parallel Distributed Processing, Volume 1: Explorations in the Microstructure of Cognition: Foundations. United Kingdom: Penguin Random House LLC.

Talking Nets: An Oral History of Neural Networks. (2000). United Kingdom: MIT Press.

Prince, S. J. (2023). Understanding Deep Learning. United Kingdom: MIT Press.

Crevier, D. (1993). AI: The Tumultuous History of the Search for Artificial Intelligence. New York: Basic Books.

Cat and dog face dataset: https://www.kaggle.com/datasets/andrewmvd/animal-faces?resource=download

Minsky, M., Papert, S. (2017). Perceptrons: An Introduction to Computational Geometry. United Kingdom: MIT Press.

Widrow, Bernard, and Michael A. Lehr. "30 years of adaptive neural networks: perceptron, madaline, and backpropagation." *Proceedings of the IEEE* 78.9 (1990): 1415-1442.

Olazaran, Mikel. "A sociological history of the neural network controversy." *Advances in Computers*. Vol. 37. Elsevier, 1993. 335-425.

Widrow, Bernard. "Generalization and information storage in networks of adaline neurons." *Self-Organizing Systems* (1962): 435-461.

Widrow, Bernard. "Thinking about thinking: the discovery of the LMS algorithm." *IEEE Signal Processing Magazine* 22.1 (2005): 100-106.

Technical Notes

Method for counting neurons in ChatGPT: In the GPT-2 implementation at https://github.com/karpathy/build-nanogpt/blob/master/train_gpt2.py, keys, queries, and values are implemented in a Linear layer with n_embd inputs and 3*n_embd outputs, where n_embd is the embedding dimension. The output projection layer has n_embd inputs and n_embd outputs. So a single attention layer has ~4*n_embd neurons. GPT-3 has an embedding dimension of 12,288, so each attention layer has ~49,152 neurons.

Each MLP block has n_embd inputs, 4*n_embd hidden units, and n_embd outputs, so ~5*n_embd neurons in total, or ~61,440. Across GPT-3's 96 layers, the total neuron count is then 96*(49,152+61,440) = 10,616,832, ignoring the initial embedding and final unembedding.

Finally, GPT-4 reportedly has ~1.8 trillion parameters (https://semianalysis.com/2023/07/10/gpt-4-architecture-infrastructure/), making it ~10x larger than GPT-3. Note that GPT-4 is reportedly a mixture of experts, and not all experts are used for each inference, so not all 1.8 trillion parameters appear to be used for a given inference call. Assuming that ~10x the parameters means ~10x the neurons, GPT-4 should have ~100M neurons.
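
The arithmetic in the note can be checked with a short Python sketch. It follows the note's counting convention (each output unit of a Linear layer counts as one neuron) and hard-codes GPT-3's published dimensions. The function names are hypothetical, and the rounded 10x scale factor for GPT-4, plus the assumption that neurons scale linearly with parameters, come from the note's own reasoning rather than any disclosed architecture.

```python
# Rough "neuron" count for a GPT-style transformer, using the note's convention:
# every output unit of a Linear layer counts as one neuron.
# Embedding and unembedding layers are ignored, as in the note.


def attention_neurons(n_embd: int) -> int:
    """Neurons in one attention block (hypothetical helper)."""
    qkv = 3 * n_embd   # fused key/query/value projection: n_embd -> 3*n_embd
    proj = n_embd      # output projection: n_embd -> n_embd
    return qkv + proj


def mlp_neurons(n_embd: int) -> int:
    """Neurons in one MLP block (hypothetical helper)."""
    hidden = 4 * n_embd  # expansion layer: n_embd -> 4*n_embd
    out = n_embd         # projection back down: 4*n_embd -> n_embd
    return hidden + out


def transformer_neurons(n_embd: int, n_layer: int) -> int:
    """Total neurons across all transformer layers."""
    return n_layer * (attention_neurons(n_embd) + mlp_neurons(n_embd))


# GPT-3 (175B parameters): embedding dimension 12,288, 96 layers.
GPT3_N_EMBD = 12_288
GPT3_N_LAYER = 96

# Assumption taken from the note: GPT-4 (~1.8T parameters, reported) is ~10x
# GPT-3 (1.8e12 / 175e9 is about 10.3, rounded to 10), and neuron count is
# assumed to scale linearly with parameter count.
PARAM_SCALE = 10

gpt3_neurons = transformer_neurons(GPT3_N_EMBD, GPT3_N_LAYER)
gpt4_estimate = gpt3_neurons * PARAM_SCALE

if __name__ == "__main__":
    print(f"attention neurons per layer: {attention_neurons(GPT3_N_EMBD):,}")
    print(f"MLP neurons per layer:       {mlp_neurons(GPT3_N_EMBD):,}")
    print(f"GPT-3 total:                 {gpt3_neurons:,}")
    print(f"GPT-4 rough estimate:        {gpt4_estimate:,}")
```

Run as a script, it prints 49,152 attention neurons and 61,440 MLP neurons per layer, 10,616,832 for GPT-3, and 106,168,320 (~100M) for the GPT-4 extrapolation. Using the unrounded ratio 1.8e12 / 175e9 instead gives roughly 109M; either way the figure is only as reliable as the linear-scaling assumption, and it ignores that a mixture-of-experts model activates only part of its parameters per call.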

Video Information

Views: 730.6K
Likes: 27.4K
Duration: 24:01
Published: Feb 1, 2025
