Understanding Neural Networks: From Neurons to Multi-Layer Perceptrons 🧠

Learn how neurons work and explore the evolution from single neurons to complex multi-layer neural networks in this comprehensive lecture.

AMILE - Machine Learning with Christian Nabert

635 views • Jan 31, 2021

About this video

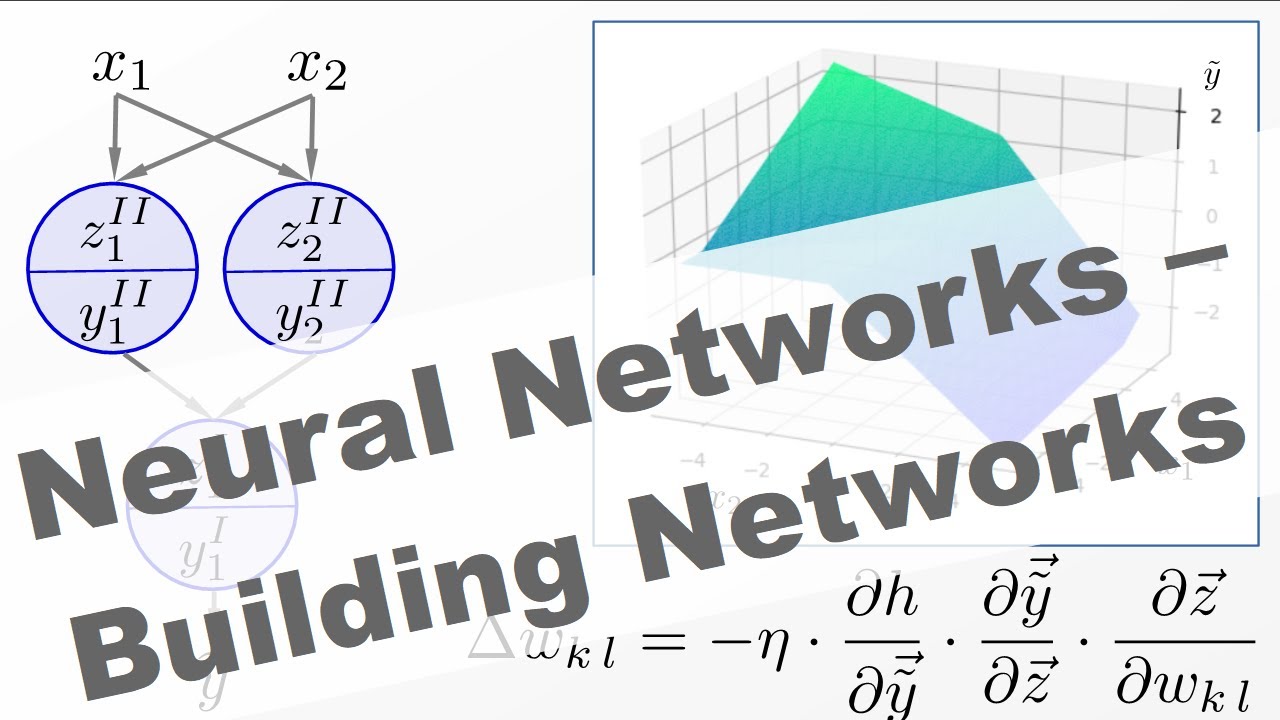

"What is a neuron and how does it work? From a single neuron to a layer of neurons to multiple layers of neurons."

___________________________________________

Subscribe to the channel https://www.youtube.com/channel/UCgQlZ6kefvYeHDe__YkFluA/?sub_confirmation=1

___________________________________________

Part 1: Why Neural Networks for Machine Learning?

https://www.youtube.com/watch?v=NaYvohpr9No

Part 2: Building Neural Networks - Neuron, Single Layer Perceptron, Multi Layer Perceptron

https://www.youtube.com/watch?v=MqeGZcqkrn0

Part 3: Activation Function of Neural Networks - Step, Sigmoid, Tanh, ReLU, LeakyReLU, Softmax

https://www.youtube.com/watch?v=rtinxohdo7Y

Part 4: How Neural Networks Really Work - From Logistic to Piecewise Linear Regression

https://www.youtube.com/watch?v=rtinxohdo7Y

Part 5: Delta Rule for Neural Network Training as Basis for Backpropagation

https://www.youtube.com/watch?v=wKbZkfuuQLw

Part 6: Derive Backpropagation Algorithm for Neural Network Training

https://www.youtube.com/watch?v=s1CFVeJHmQk

Part 7: Gradient Based Training of Neural Networks

https://www.youtube.com/watch?v=N8ZKqB19Vw4

___________________________________________

In this section, we build neural networks starting from a single neuron. The input variables are scaled by multiplicative weights and summed. Adding a bias term gives the linear part of the neuron. This result is then modified non-linearly by an activation function. With a sigmoid activation function, a single neuron is equivalent to logistic regression.
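As a minimal sketch of the single neuron described above (weighted sum, bias, then activation), assuming plain Python and illustrative names and values that are not taken from the lecture:

```python
import math


def sigmoid(z):
    """Logistic sigmoid, mapping any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x, w, b, activation=sigmoid):
    """Single neuron: weighted sum of the inputs plus bias, then activation."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b  # linear part
    return activation(z)  # non-linear modification


# Hypothetical example values, chosen so that the linear part is exactly 0.
x = [1.0, 2.0]     # input variables
w = [0.5, -0.25]   # multiplicative weights
b = 0.0            # bias term
y = neuron(x, w, b)
print(y)  # 0.5, because sigmoid(0) = 0.5
```

With the sigmoid as activation, `neuron` computes the same function as a logistic regression model with coefficients `w` and intercept `b`; swapping in another activation changes only the last step.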

Placing neurons in parallel leads to a single layer perceptron (SLP). Stacking such layers of neurons gives a multi-layer perceptron (MLP). The layers apart from the output layer are called hidden layers. The calculations in such neural networks can be written as matrix-vector multiplications. In this lecture, we consider fully connected feedforward neural networks without feedback (recurrent) connections or shortcut connections.
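The layer-by-layer calculation can be sketched as repeated matrix-vector products. This is an illustration under assumptions: the 2-3-1 layout, the weights, and the function names `layer` and `mlp_forward` are made up for the example, and plain Python lists stand in for matrices.

```python
import math


def sigmoid(z):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(W, b, x, activation):
    """One fully connected layer: activation(W x + b), one row of W per neuron."""
    return [
        activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
        for row, b_i in zip(W, b)
    ]


def mlp_forward(layers, x):
    """Feed x through each (W, b, activation) layer in order (feedforward only)."""
    for W, b, activation in layers:
        x = layer(W, b, x, activation)
    return x


# Hypothetical network: 2 inputs, one hidden layer with 3 neurons, 1 output neuron.
hidden = ([[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]], [0.0, 0.1, -0.1], sigmoid)
output = ([[0.7, -0.8, 0.9]], [0.05], sigmoid)

y = mlp_forward([hidden, output], [1.0, 2.0])
print(y)  # a list with one value between 0 and 1
```

Each row of a weight matrix holds the weights of one neuron, so a layer with three neurons and two inputs uses a 3x2 matrix. Every neuron sees every input (fully connected), and values only flow forward from one layer to the next.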

Video Information

Views: 635
Likes: 16
Duration: 16:58
Published: Jan 31, 2021
