AI LLM Benchmarking: Measuring Machine Intelligence

Compare large language models' progress as AI evolves rapidly through benchmarking.

ProGPT AI

28 views • Nov 23, 2025

About this video

AI LLM Benchmarking - comparing models

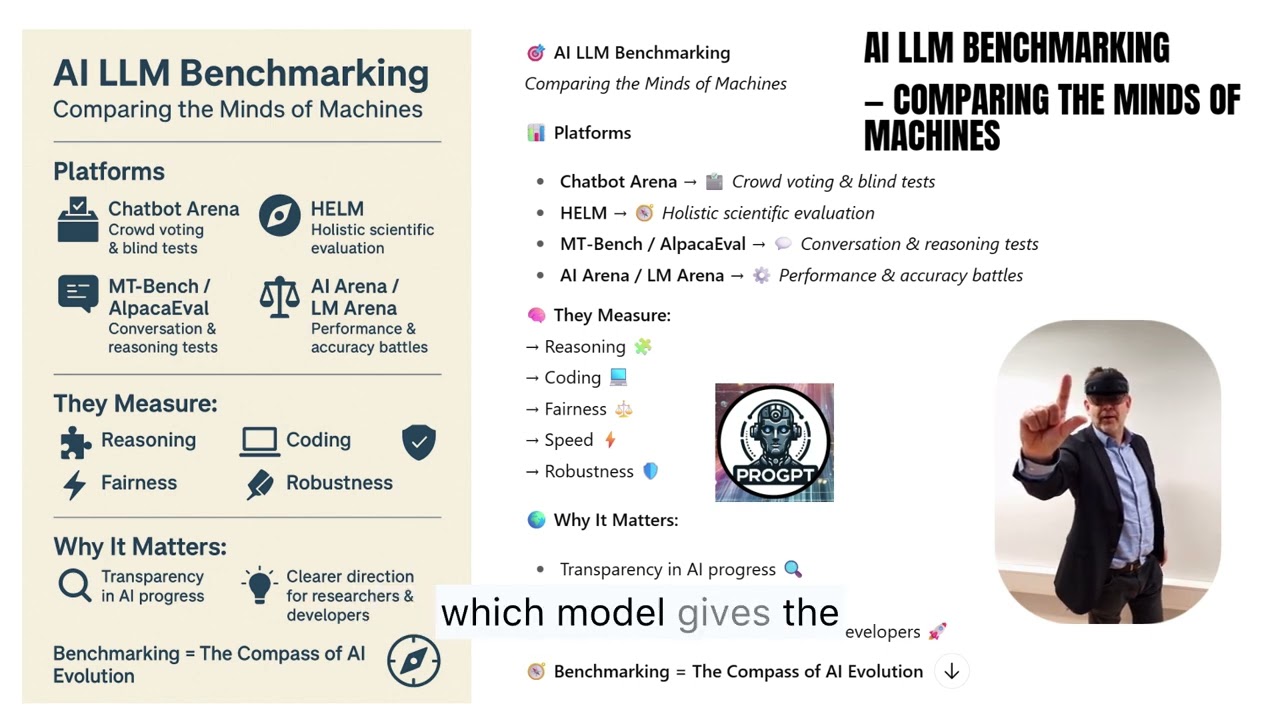

Large language models are evolving at lightning speed – and benchmarking is how we measure that progress. Benchmarking platforms like Chatbot Arena and HELM, and others enable researchers and users to compare the world's leading AI models – from GPT-5 and Claude to Gemini, Mistral, and open-source contenders.

Each platform has its own method:

Chatbot Arena (which publishes the LMSYS Leaderboard using Elo ratings) uses crowd-sourced blind tests where humans simply choose which model gives the better answer.

HELM (Holistic Evaluation of Language Models) takes a scientific approach, scoring models on fairness, efficiency, and robustness across many real-world tasks.

Others, like MT-Bench or AlpacaEval, focus on conversational ability, reasoning, or coding performance.

These benchmarks matter because they bring transparency, trust, and direction to AI development.

They help teams identify strengths and weaknesses, guide innovation, and ensure that progress in AI is not just faster – but also smarter and safer.In short: benchmarking is the compass that keeps AI evolution on course.

- Progpt.fi

--------------------

This way. Every day. Right here.

Edtech disruption in motion.

We build with AI to create what’s next.

We learn with AI to shape a wiser future.

For better choices. Better work. Better wellbeing.

For us. For humans. For tomorrow.

Progpt.fi

Large language models are evolving at lightning speed – and benchmarking is how we measure that progress. Benchmarking platforms like Chatbot Arena and HELM, and others enable researchers and users to compare the world's leading AI models – from GPT-5 and Claude to Gemini, Mistral, and open-source contenders.

Each platform has its own method:

Chatbot Arena (which publishes the LMSYS Leaderboard using Elo ratings) uses crowd-sourced blind tests where humans simply choose which model gives the better answer.

HELM (Holistic Evaluation of Language Models) takes a scientific approach, scoring models on fairness, efficiency, and robustness across many real-world tasks.

Others, like MT-Bench or AlpacaEval, focus on conversational ability, reasoning, or coding performance.

These benchmarks matter because they bring transparency, trust, and direction to AI development.

They help teams identify strengths and weaknesses, guide innovation, and ensure that progress in AI is not just faster – but also smarter and safer.In short: benchmarking is the compass that keeps AI evolution on course.

- Progpt.fi

--------------------

This way. Every day. Right here.

Edtech disruption in motion.

We build with AI to create what’s next.

We learn with AI to shape a wiser future.

For better choices. Better work. Better wellbeing.

For us. For humans. For tomorrow.

Progpt.fi

Tags and Topics

Browse our collection to discover more content in these categories.

Video Information

Views

28

Likes

4

Duration

1:04

Published

Nov 23, 2025

Related Trending Topics

LIVE TRENDSRelated trending topics. Click any trend to explore more videos.

Trending Now